Anaconda Unaffected by PyTorch Security Incident

Cheuk Ting Ho


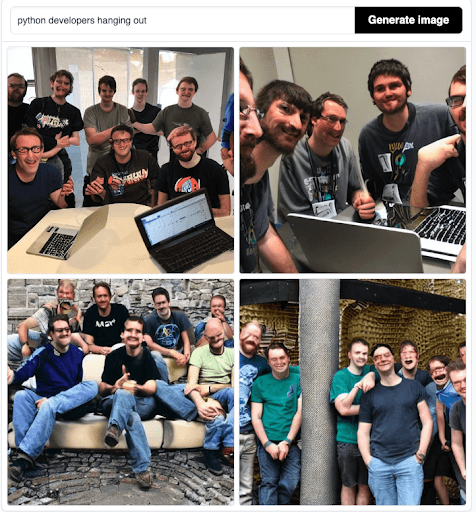

By now you’ve probably heard about Stability AI’s Stable Diffusion, a text-to-image machine learning (ML) model that generates images based on descriptive textual prompts (for more technical details, refer to its official GitHub repository). I first experimented with Stable Diffusion during a conference. My friends and I were having fun trying to turn our conference experience into images. Laughter and jokes aside, I was quick to realize that, while it was very easy to generate a picture showing, say, a male friend having a pint in a pub, it was much harder to generate a picture of myself as a female developer. In fact, it was almost impossible to get the model to display any female Python developers without specifying “female” in the prompt.

This experience did not shock me, as female developers are a minority in the tech community. However, I am concerned about this underrepresentation in the model. Will it create a self-fulfilling loop that reinforces gender bias and so undermines the efforts our community is making to encourage diversity and inclusion? Let’s take a closer look at the hidden biases and stereotypes in the model.

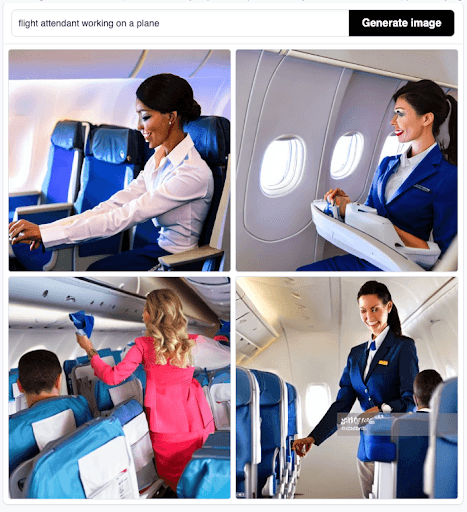

As mentioned, the first type of bias I encountered was gender stereotyping. If I enter “flight attendant working on a plane” as the prompt, most of the results display young, uniformed females smiling on a plane. What about all of the male flight attendants? I’ve traveled a lot and can confirm male flight attendants are perfectly common.

On the other hand, you can guess what the results look like if I input “mechanics fixing a car.” They feature young males as mechanics. Even a quick Google search, however, yields some images of female mechanics. Stock photography sites also offer up images that try to break gender stereotypes, wherein the mechanic fixing a car is female.

Note that I specifically chose these examples for testing because these careers are historically associated with particular genders. While there are societal efforts to break these stereotypes and these traditional associations are becoming less and less accurate, we still see a lot of bias in this model and others.

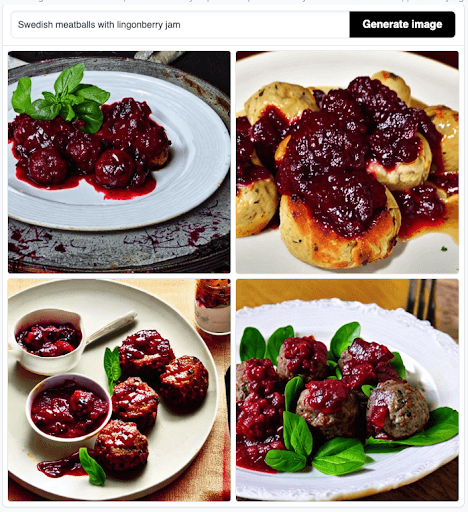

I tried to generate an image of Swedish meatballs, which are usually served with lingonberry jam on the side. Maybe you’ve tried them at Ikea? I got this idea from a keynote I attended at PyCon Sweden, in which Julien Simon described a similar experiment. All of the generated pictures showed the meatballs sitting in the jam, as if it were the tomato sauce in a dish of Italian meatballs. I was surprised that the model seemed to have decided that meatballs can only be served one way. If only Stable Diffusion were a person and I could take it to Ikea to try some Swedish meatballs.

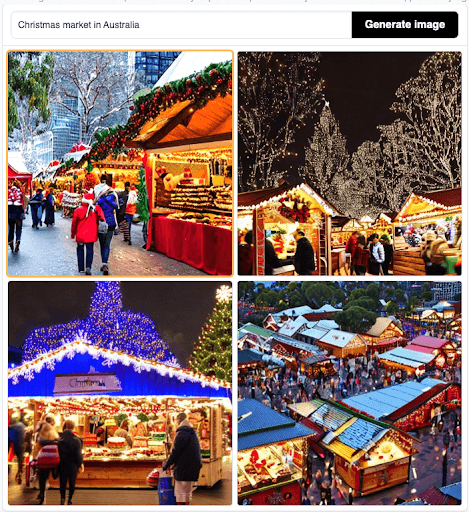

Next, I tried something a little wackier: I asked Stable Diffusion to generate images of Christmas markets in Australia. You see where this is going, right? In some pictures, people are bundled up in warm clothes and the trees are bare; some trees even have snow on them. But as we all know, Christmas falls during summer in Australia. Perhaps these pictures are meant to show markets held during the colder months? Otherwise, it seems the model has decided that Christmas must always take place in winter, which is not universally true.

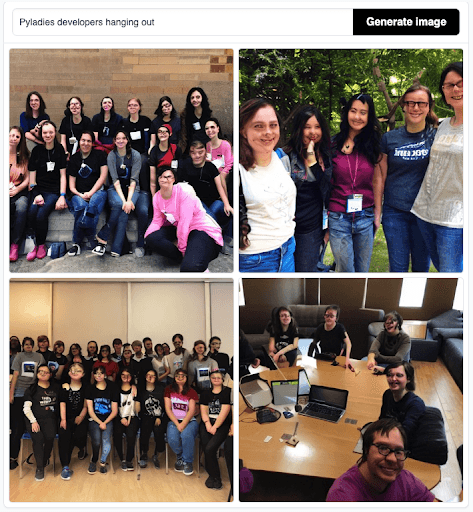

Stable Diffusion seems to have a hard time generating results that show historically underrepresented demographics unless the prompt gives it specific instructions. Think back to the first picture I showed you, where I entered “Python developers hanging out.” Not all of my Python developer friends look like or identify as white men! So let’s try something else.

If I replace the term “Python” in the prompt with “PyLadies,” which refers to an international Python mentorship group for marginalized genders that champions diversity, women now appear in the pictures. But the images still don’t reflect my experience at, say, PyCon Ghana. What if I get even more specific?

Finally, the images show better and more accurate representation. But as you can see, you have to be quite specific in your prompt for Stable Diffusion to generate something representative in this way. This is concerning, as it perpetuates the problem of underrepresentation.

So how can we fix this? My honest answer is “I don’t know.” “Fixing” a model that was trained through the vast efforts of many researchers is not easy. Some may suggest training it on more diverse data; others may recommend fine-tuning the model’s weights. However, the first step is recognizing the problem and wanting to address it. We ML and AI enthusiasts must be conscious of these stereotypes and underrepresentation problems, and of the impact they can have if biased technology is widely adopted. Then, together, we can work toward a solution.

Cheuk Ting Ho is a developer advocate at Anaconda. In her prior roles as a data scientist, she leveraged her advanced numerical and programming skills, particularly in Python. Cheuk contributes to multiple open-source libraries, such as Hypothesis and pandas, and is a frequent speaker at universities and conferences. She has organized conferences including EuroPython (of which she is a board member), PyData Global, and Pyjamas. In 2021, Cheuk became a Python Software Foundation fellow.
