A Comprehensive List of Python in Excel Resources

Albert DeFusco


With Thanksgiving upon us, we decided to put together a fun project that showcases how you can use generative AI in your coding work. Using Anaconda’s AI Navigator as a coding assistant, we’ll demonstrate prompt engineering techniques, applied to both the system prompt and the user prompt, that together provide a templated experience.

By the end of this post, you’ll know how to start dropping fun turkey eggs into your projects with generative AI. Although this is a lighthearted project, the skills it demonstrates show how generative AI can unlock creative problem-solving and enhance your technical toolkit.

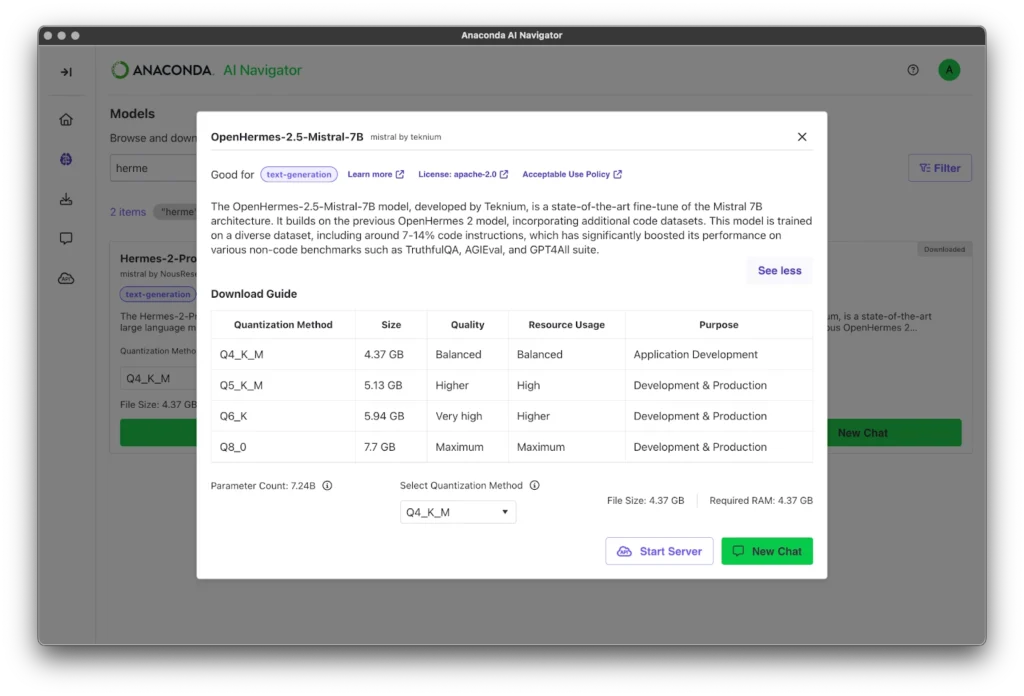

When choosing a model for developing a coding assistant, there are a few key factors to consider. First, ensure the model has been fine-tuned on relevant code datasets to understand programming languages and conventions effectively. Look for models with a strong track record in code generation, debugging, or explaining snippets across multiple programming languages.

When selecting a model, it’s also important to consider quantization—a process that reduces the precision of the model’s parameters (e.g., from 32-bit to 8-bit) to make it more efficient without significantly compromising performance. Quantization can drastically lower memory usage and improve inference speed, enabling larger models to run on less powerful hardware.
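To make the memory savings concrete, here is a rough back-of-the-envelope sketch. It assumes weight memory is simply parameter count times bits per parameter (ignoring activations, the KV cache, and file-format overhead), and it uses a simple symmetric 8-bit scheme purely for illustration. The Q4_K_M format used later in this post quantizes weights in blocks, each with its own scale, so it is more involved than this. The function names are hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor 8-bit quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One shared scale for the whole tensor; guard against an all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(v / scale) for v in values], scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the 8-bit integers and the shared scale."""
    return [x * scale for x in q]


if __name__ == "__main__":
    n = 7e9  # roughly 7 billion parameters, as in a Mistral-7B-class model
    for bits in (32, 16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{weight_memory_gb(n, bits):.1f} GB")

    weights = [0.12, -0.5, 0.33, 0.001, -0.27]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    print("quantized:", q)
    print("restored: ", [round(r, 4) for r in restored])
```

For a 7-billion-parameter model this gives about 28 GB at 32-bit, 14 GB at 16-bit, 7 GB at 8-bit, and 3.5 GB at 4-bit. The round trip also shows the cost: each restored weight can differ from the original by up to half a quantization step.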

For this project, I’ll use the OpenHermes-2.5-Mistral-7B model from teknium. Its training data includes code datasets, which should make it a good fit for my use case: prompting an LLM to act as a coding assistant.

After downloading the Q4_K_M quantized version of the OpenHermes model, I started a chat session in AI Navigator to explore its capabilities. Prompt engineering is an important skill to master when working with generative AI; learn more about techniques such as zero-shot prompting in our blog.

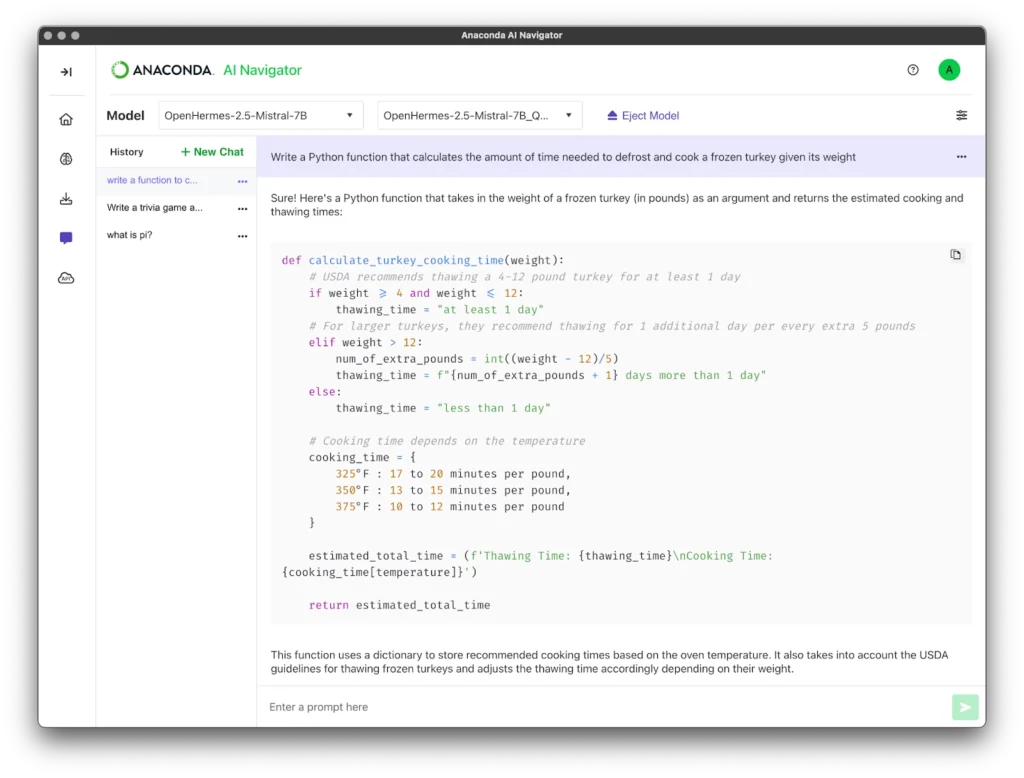

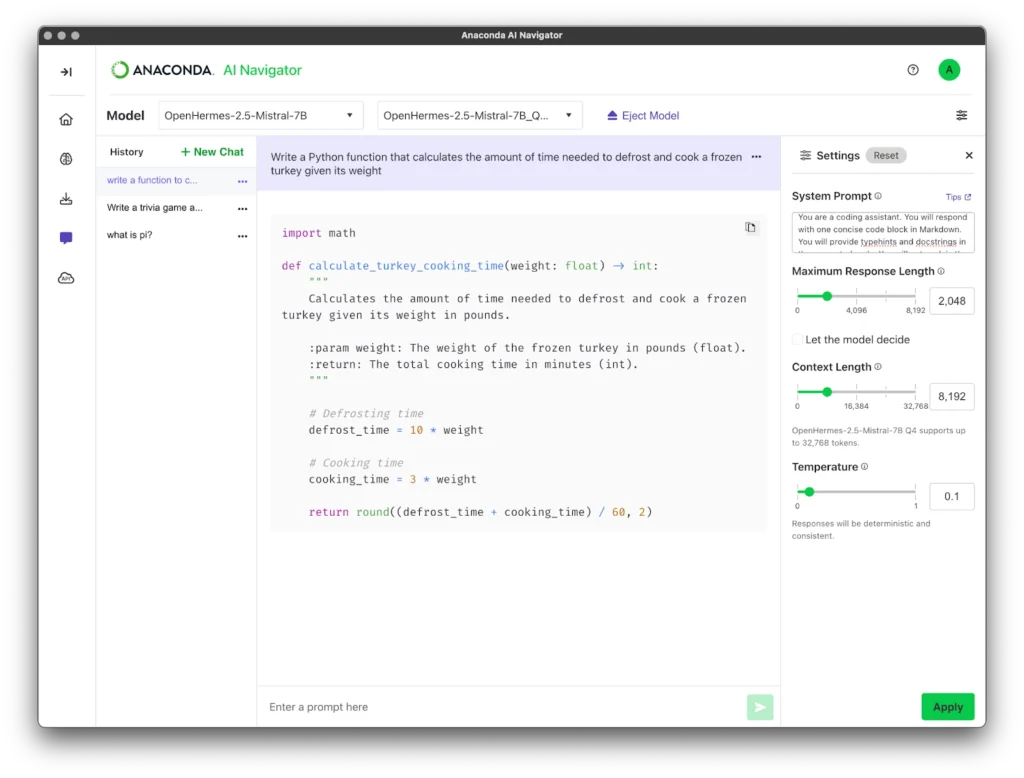

In this case, I used zero-shot prompting (a single instruction with no worked examples) to test the model:

Write a Python function that calculates the amount of time needed to defrost and cook a frozen turkey given its weight

Using the default parameters of the chat session in AI Navigator produces the following response:

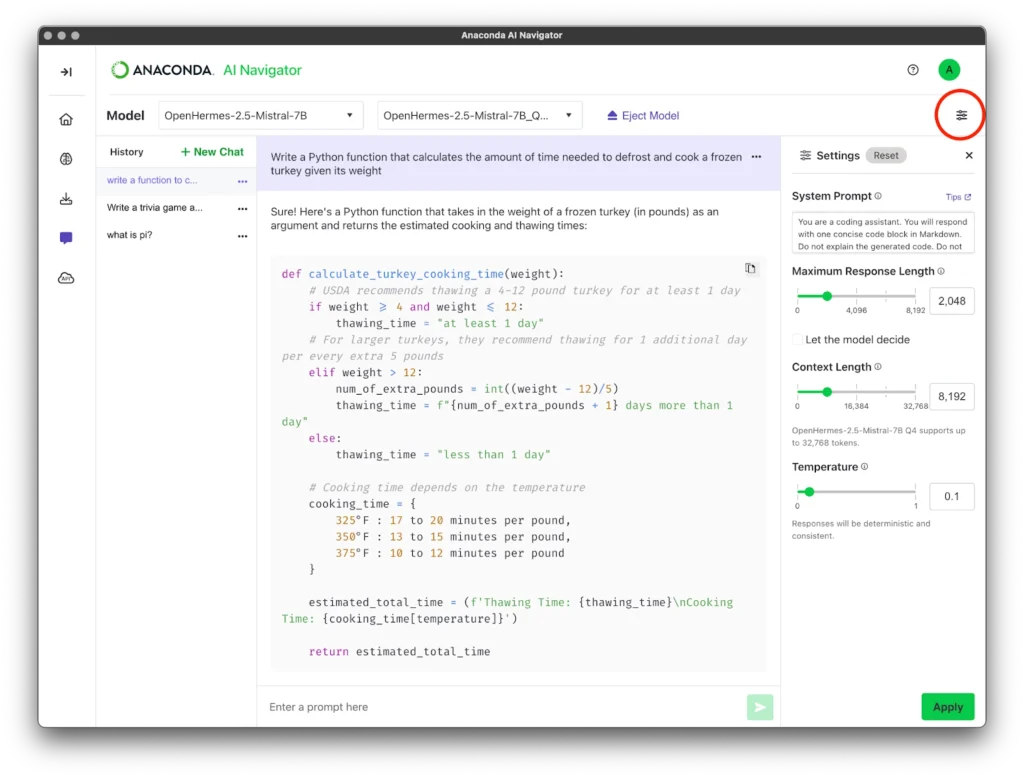

While the response is useful, we can improve it with the System Prompt configuration parameter in AI Navigator. A system prompt is an initial set of instructions given to the model that guides how it responds to the user-supplied prompts in the chat interface. System prompts should state very clearly how the model is expected to respond to the user prompt.
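The post doesn’t show how AI Navigator hands the system prompt to the model, so the following is a sketch under an assumption: that the chat messages are rendered with the model’s chat template. The OpenHermes 2.5 model card documents ChatML for this, where each turn is wrapped in `<|im_start|>` and `<|im_end|>` markers and the system turn comes first. `build_messages` and `render_chatml` are hypothetical helper names, not part of AI Navigator.

```python
from typing import Optional


def build_messages(user_prompt: str, system_prompt: Optional[str] = None) -> list[dict[str, str]]:
    """Return role-tagged chat messages, placing the system message (if any) first."""
    messages: list[dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def render_chatml(messages: list[dict[str, str]]) -> str:
    """Render messages in ChatML and leave an open assistant turn for the model to complete."""
    rendered = "".join(
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    )
    # The model generates its reply after this final, unterminated assistant header.
    return rendered + "<|im_start|>assistant\n"


if __name__ == "__main__":
    system = (
        "You are a coding assistant. You will respond with one concise code block "
        "in Markdown. You will not explain the generated code. You will not provide examples."
    )
    user = (
        "Write a Python function that calculates the amount of time needed to "
        "defrost and cook a frozen turkey given its weight"
    )
    print(render_chatml(build_messages(user)))          # zero-shot prompt, no system prompt
    print(render_chatml(build_messages(user, system)))  # same prompt with a system prompt
```

Because the system turn is prepended to every conversation, its instructions apply to each user prompt without being retyped, which is what makes a system prompt useful as a template.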

In the default response, the model included detailed explanations of its code and additional example uses. While thorough, this behavior may not suit a coding assistant, where the focus is on delivering concise, actionable code.

To shape the model’s responses without retraining it, a system prompt can establish clear guidelines for the assistant’s behavior. For instance:

You are a coding assistant. You will respond with one concise code block in Markdown. You will not explain the generated code. You will not provide examples.

To apply this configuration in AI Navigator, simply click the settings icon in the top-right corner of the chat window, paste the crafted system prompt into the appropriate field, and click Apply. This setup ensures the assistant stays focused on generating clean, actionable code blocks without unnecessary explanations.

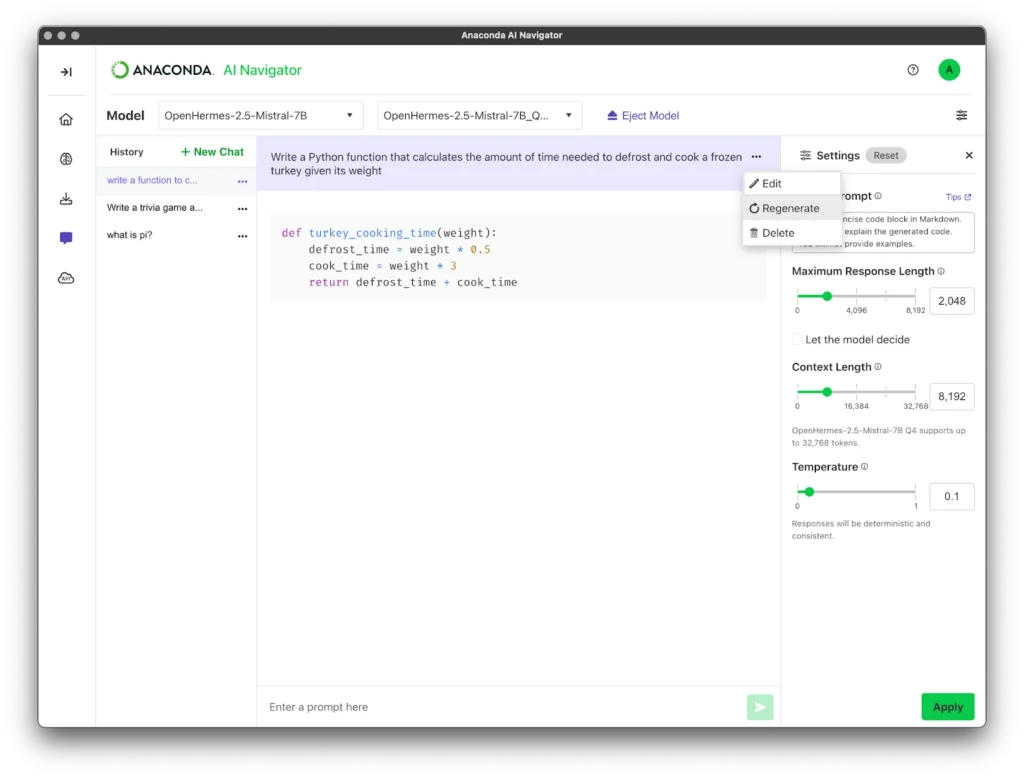

To test the new settings, regenerate the response to the same prompt.

The updated response is much closer to the desired output for a coding assistant. For added precision, the system prompt can also specify coding preferences. For example, asking for type hints and docstrings keeps the generated code consistent with a preferred coding style. The updated prompt might look like this:

You are a coding assistant. You will respond with one concise code block in Markdown. You will provide typehints and docstrings in the generated code. You will not explain the generated code. You will not provide examples.
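For reference, here is a hand-written sketch of the style this refined prompt asks for: type hints, a docstring, and no explanation outside the code. It is not the model’s actual output (that appears in the screenshot for this step). The thawing and roasting rates are assumed rules of thumb (about 24 hours in the refrigerator per 4 pounds, and about 15 minutes of roasting per pound for an unstuffed bird), so they are exposed as parameters; `TurkeyTimes` and `turkey_times` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TurkeyTimes:
    """Estimated hours to thaw in the refrigerator and to roast."""

    defrost_hours: float
    cook_hours: float


def turkey_times(
    weight_lb: float,
    lb_per_defrost_day: float = 4.0,
    cook_minutes_per_lb: float = 15.0,
) -> TurkeyTimes:
    """Estimate defrost and cook time for a frozen turkey.

    Args:
        weight_lb: Weight of the turkey in pounds.
        lb_per_defrost_day: Pounds that thaw per 24 hours in the refrigerator
            (assumed rule of thumb).
        cook_minutes_per_lb: Roasting minutes per pound for an unstuffed bird
            (assumed rule of thumb).

    Returns:
        A TurkeyTimes with both estimates in hours.

    Raises:
        ValueError: If any argument is not positive.
    """
    if weight_lb <= 0 or lb_per_defrost_day <= 0 or cook_minutes_per_lb <= 0:
        raise ValueError("weight and rates must be positive")
    defrost_hours = weight_lb / lb_per_defrost_day * 24
    cook_hours = weight_lb * cook_minutes_per_lb / 60
    return TurkeyTimes(defrost_hours=defrost_hours, cook_hours=cook_hours)
```

For example, `turkey_times(16)` returns `TurkeyTimes(defrost_hours=96.0, cook_hours=4.0)`. Note that nothing here needs the `math` module.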

After applying this refined system prompt and regenerating the response, the model produced output that met expectations for clarity and style, though it included an unnecessary import of the math module. This demonstrates how small adjustments to the prompt can significantly improve the alignment of the AI’s responses with user needs.

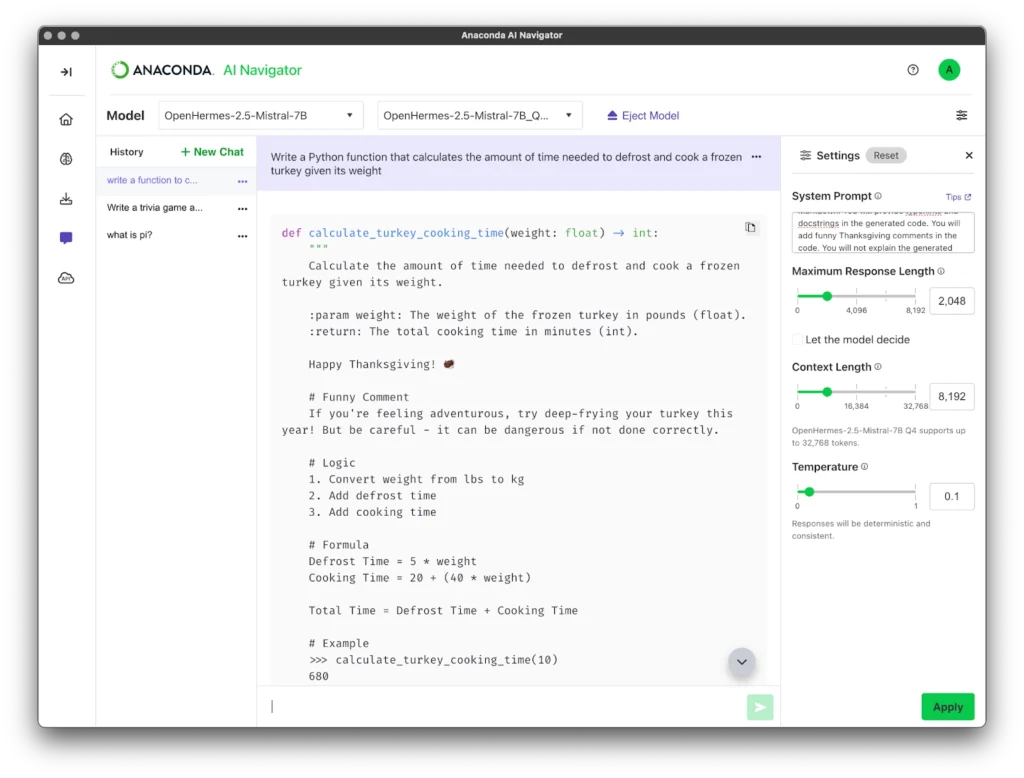

Now that I’ve been able to control the output of the LLM with a system prompt, I want to see what else it can do. Here’s my system prompt with changes in bold:

You are a **funny** coding assistant. You will respond with one concise code block in Markdown. You will provide typehints and docstrings in the generated code. **You will add funny Thanksgiving comments in the code.** You will not explain the generated code. You will not provide examples.
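The three system prompts in this post differ only in the persona and in the optional style rules, so they can all be produced from one template. This is a minimal sketch of that idea; `build_system_prompt`, `TYPED`, and `FESTIVE` are hypothetical names, and the resulting string would still be pasted into AI Navigator’s System Prompt field by hand.

```python
def build_system_prompt(
    persona: str = "coding assistant",
    style_rules: tuple[str, ...] = (),
) -> str:
    """Assemble a system prompt: persona, output format, optional style rules, then restrictions."""
    sentences = [
        f"You are a {persona}.",
        "You will respond with one concise code block in Markdown.",
        *style_rules,  # optional preferences slot in before the restrictions
        "You will not explain the generated code.",
        "You will not provide examples.",
    ]
    return " ".join(sentences)


TYPED = "You will provide typehints and docstrings in the generated code."
FESTIVE = "You will add funny Thanksgiving comments in the code."

if __name__ == "__main__":
    print(build_system_prompt())                                      # concise assistant
    print(build_system_prompt(style_rules=(TYPED,)))                  # adds style preferences
    print(build_system_prompt("funny coding assistant", (TYPED, FESTIVE)))  # festive version
```

Keeping the fixed sentences in one place means a new variant only has to supply what changes, which keeps each version of the prompt consistent with the others.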

The output is the same function, but the docstring now includes a fun Thanksgiving recommendation.

This Thanksgiving-themed project highlights how generative AI can enhance coding workflows while showcasing the power of prompt engineering. By refining AI behavior through clear, structured prompts, organizations can more effectively streamline their AI workflows, boost creativity, and drive innovation.

With tools like Anaconda’s AI Navigator, experimenting with cutting-edge models becomes accessible and secure. Download AI Navigator today to explore custom prompts and discover how generative AI can transform your projects—whether for business, creativity, or fun.

What fun system prompts can you apply using AI Navigator? Tag us on social media with your holiday projects to be featured!

Talk to one of our experts to find solutions for your AI journey.