
Adam Van Etten


When standard navigation tools (e.g., Google Maps) are down and time is of the essence, how does one optimally evacuate from (or position aid to) a hazardous locale? In this post I explore one option, called Project Shackleton. In a disaster scenario (be it an earthquake or an invasion) where communications are unreliable, overhead imagery often provides the first glimpse into what is happening on the ground. Accordingly, Project Shackleton combines satellite/aerial imagery with advanced computer vision to extract both the current road network and the precise positions of all vehicles within the area of interest.

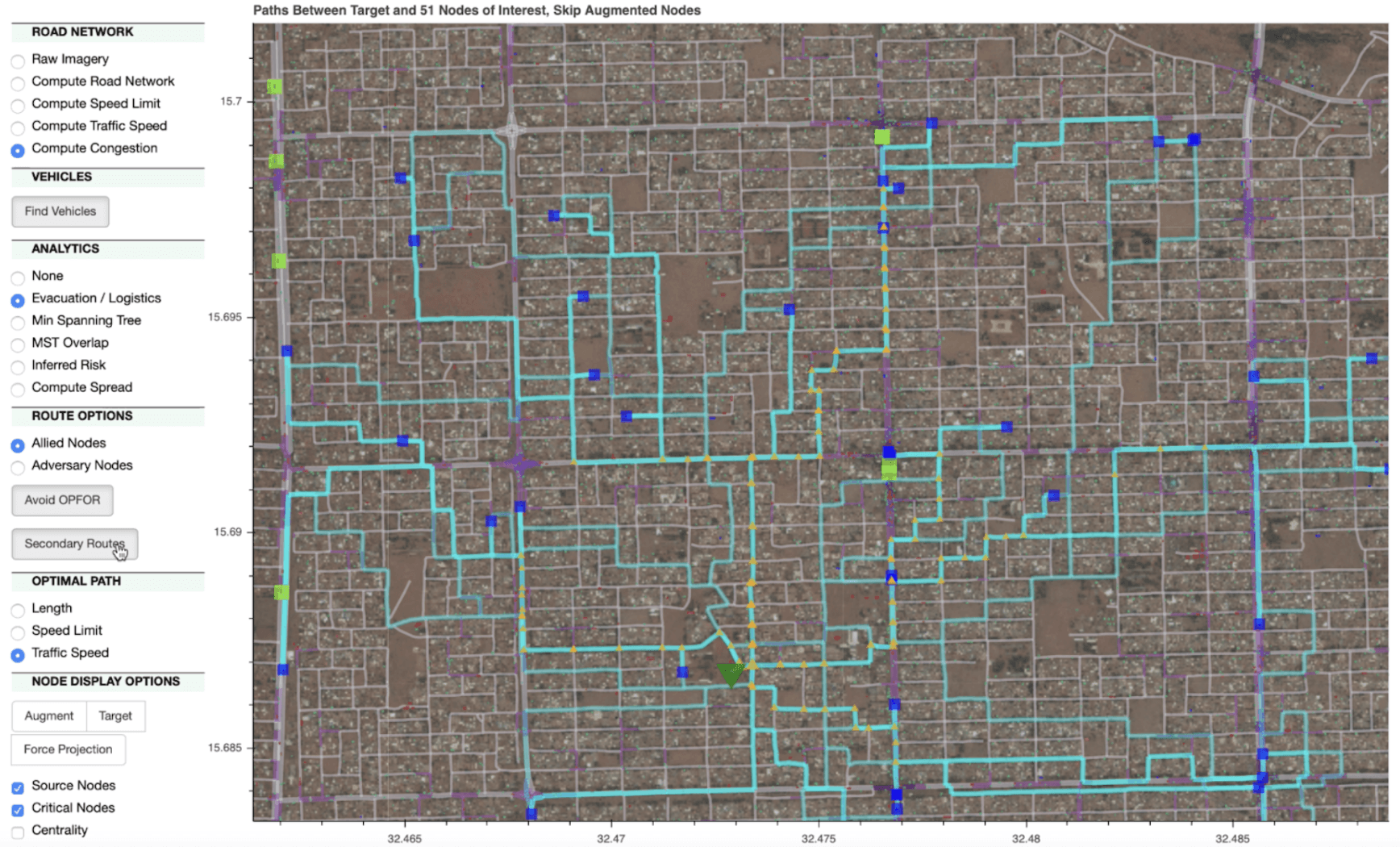

These predictions enable a host of graph theory analytics, which are incorporated into an interactive dashboard built atop Anaconda’s Bokeh interactive visualization library. This dashboard allows us to compute and display a number of evacuation and routing scenarios. In this post I explore these scenarios and provide details on the open-source codebase. An explainer video is also available for those who would like to see the code in action.

In many scenarios where reaction speed is critical and communication networks are inconsistent (e.g., natural disasters, war), satellite imagery frequently provides one of the few reliable data sources. For example, the frequent revisits of the Planet constellation have provided valuable insights into the war in Ukraine. Automated analytics on such imagery can therefore prove very valuable. In this post I focus on the rapid extraction of both vehicles and road networks from overhead imagery, which allows a host of interesting problems to be tackled, such as congestion mitigation, optimized logistics, and evacuation routing.

A reliable proxy for human population density is crucial for effective response to natural disasters and humanitarian crises. Automobiles provide such a proxy. People tend to stay near their cars, so knowledge of where cars are located in real time is helpful in disaster response scenarios. In this project, we deploy the YOLTv5 codebase to rapidly identify and geolocate vehicles over large areas. Geolocations of all the vehicles in an area allow responders to prioritize response areas.
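To make the prioritization step concrete, here is a minimal sketch of turning pixel-space vehicle detections into map coordinates and ranking grid cells by vehicle count. It assumes detections arrive as pixel bounding boxes `(xmin, ymin, xmax, ymax)` and that the image carries a GDAL-style affine geotransform in a projected CRS measured in meters (e.g., UTM). The function names `pixel_to_map` and `rank_cells` and the 500 m cell size are hypothetical, not part of YOLTv5.

```python
from collections import Counter

# GDAL-style geotransform (assumed):
# (x_origin, pixel_width, row_rotation, y_origin, col_rotation, pixel_height)


def pixel_to_map(col: float, row: float, gt: tuple) -> tuple:
    """Convert a pixel (col, row) to map coordinates via an affine geotransform."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def rank_cells(boxes, gt: tuple, cell_size: float = 500.0):
    """Count vehicle centroids per square grid cell; return cells sorted by count.

    boxes: iterable of pixel bounding boxes (xmin, ymin, xmax, ymax) (hypothetical schema).
    cell_size: cell edge length in map units (meters if the CRS is projected).
    """
    counts = Counter()
    for xmin, ymin, xmax, ymax in boxes:
        # Use the bounding-box center as the vehicle location.
        x, y = pixel_to_map((xmin + xmax) / 2, (ymin + ymax) / 2, gt)
        cell = (int(x // cell_size), int(y // cell_size))
        counts[cell] += 1
    return counts.most_common()
```

The densest cells come first, which gives responders a simple ordering of where people are most likely concentrated. If the imagery were in geographic (lat/lon) coordinates instead, the cell size would be in degrees and cells would not be equal-area.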

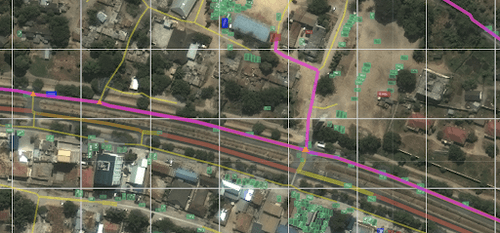

Yet vehicle detections really come into their own when combined with road network data. We use the CRESI framework to extract road networks with travel time estimates, thus permitting optimized routing. Because CRESI extracts roads from imagery alone, flooded areas or obstructed roadways appear as breaks in the CRESI road graph. This is crucial for post-disaster scenarios, where existing road maps may be out of date and routes suggested by existing navigation services may be impassable or hazardous.
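The routing idea can be sketched with a travel-time-weighted graph and Dijkstra's algorithm. This is a self-contained illustration, not CRESI's output format: it assumes each road segment is a hypothetical `(u, v, length_m, speed_mps)` tuple and treats roads as two-way. A severed (flooded) segment is modeled simply by omitting it from the edge list. With NetworkX, the equivalent query would be `nx.shortest_path(G, source, target, weight="travel_time")`, where the edge attribute name `travel_time` is itself an assumption.

```python
import heapq


def travel_time_graph(edges):
    """Build an undirected adjacency map weighted by travel time in seconds.

    edges: iterable of (u, v, length_m, speed_mps) tuples (hypothetical schema).
    """
    graph = {}
    for u, v, length_m, speed_mps in edges:
        t = length_m / speed_mps  # seconds to traverse this segment
        graph.setdefault(u, {})[v] = t
        graph.setdefault(v, {})[u] = t
    return graph


def fastest_route(graph, source, target):
    """Dijkstra's algorithm; returns (total_seconds, path) or (inf, []) if unreachable."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == target:
            # Walk predecessors back to the source to recover the path.
            path = [node]
            while node in prev:
                node = prev[node]
                path.append(node)
            return d, path[::-1]
        for nbr, w in graph.get(node, {}).items():
            nd = d + w
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    return float("inf"), []


roads = [("A", "B", 1000, 20), ("B", "D", 1000, 20), ("A", "C", 600, 10), ("C", "D", 600, 10)]
print(fastest_route(travel_time_graph(roads), "A", "D"))    # (100.0, ['A', 'B', 'D'])
flooded = [e for e in roads if {e[0], e[1]} != {"B", "D"}]  # segment B-D washed out
print(fastest_route(travel_time_graph(flooded), "A", "D"))  # (120.0, ['A', 'C', 'D'])
```

Removing the B-D segment forces the slower detour through C, which mirrors how a break in the imagery-derived graph changes the recommended route.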

Placing the detected vehicles on the road graph enables a multitude of graph theory analytics to be employed (congestion, evacuation, intersection centrality, etc.). Of particular note, the test city selected below (Dar es Salaam) is not represented in the training data for either CRESI or YOLTv5. This suggests that the methodology generalizes well and can be applied immediately to unseen geographies whenever a new need arises.
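A minimal sketch of "placing vehicles on the graph" is to snap each vehicle location to its nearest road node and count vehicles per node. This assumes vehicles and nodes share the same projected coordinate system; the function name `snap_to_nodes` is hypothetical. The brute-force search is O(vehicles × nodes), so for a whole city a spatial index such as `scipy.spatial.cKDTree` would be the natural replacement.

```python
import math
from collections import Counter


def snap_to_nodes(vehicles, node_xy):
    """Assign each vehicle (x, y) to its nearest road-graph node by Euclidean distance.

    vehicles: iterable of (x, y) positions.
    node_xy: dict mapping node id -> (x, y) in the same coordinate system.
    Returns a Counter of vehicles per node. Brute force, fine for a small sketch.
    """
    counts = Counter()
    for vx, vy in vehicles:
        nearest = min(node_xy, key=lambda n: math.dist((vx, vy), node_xy[n]))
        counts[nearest] += 1
    return counts


nodes = {"A": (0.0, 0.0), "B": (100.0, 0.0), "C": (0.0, 100.0)}
print(snap_to_nodes([(5, 5), (95, 3), (90, -2), (10, 80)], nodes))
```

The resulting per-node counts are the input that downstream analytics (evacuation load, congestion) operate on.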

We use the SpaceNet 5 image of Dar es Salaam as our test imagery. Deep learning inference is best run on a GPU, so we use the freely available Amazon SageMaker Studio Lab. Setting up a Studio Lab environment to execute both CRESI and YOLTv5 is quite easy, and inference takes ~3 minutes for our 12-square-kilometer test region. Precise steps are detailed in this notebook.

The Shackleton dashboard creates a Bokeh server that displays the data and connects back to underlying Python libraries (such as NetworkX, OSMnx, and scikit-learn). While a number of options exist for spinning up interactive dashboards, there are advantages to using Bokeh. One is the fact that all plotting can be accomplished with the standard language of data science: Python; knowledge of HTML (or any other language) is unnecessary. Another advantage is that Bokeh servers are able to connect to underlying Python data science libraries, which enables real-time computation of arbitrarily complex quantities. To support plotting of large satellite images, we spin up a tile server courtesy of localtileserver in addition to the Bokeh server.

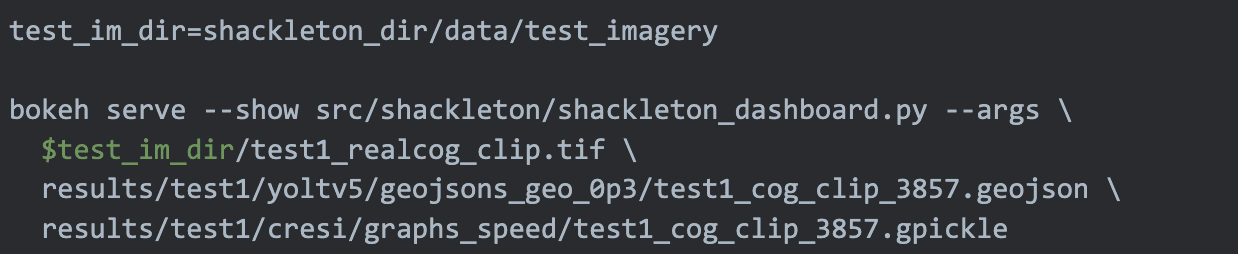

The dashboard can be fired up with a simple command, such as:

This will invoke the interactive dashboard, which will look something like the image below:

Figure 1. Sample view of the Shackleton dashboard.

Routing calculations are done on the fly, so any number of scenarios can be explored with the dashboard, such as:

Real-time road network status

Vehicle localization and classification

Optimized bulk evacuation or ingress

Congestion estimation and rerouting

Critical communications/logistics nodes

Inferred risk from hostile actors
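Two of these scenarios, bulk evacuation and congestion estimation, can be sketched together: run one Dijkstra search outward from the evacuation point to get every node's fastest time out, then push each node's vehicle count along its route to see which road segments would carry the most traffic. This is an illustration under stated assumptions, not the dashboard's code: the graph is a hypothetical adjacency dict of travel times in seconds, and travel times are assumed symmetric so that searching from the exit is equivalent to searching toward it. NetworkX offers `nx.single_source_dijkstra(G, source, weight=...)` for the first step, and `nx.betweenness_centrality(G, weight=...)` is one common way to flag critical nodes.

```python
import heapq
from collections import Counter


def evacuation_tree(graph, exit_node):
    """Single-source Dijkstra from the exit over an undirected travel-time graph.

    graph: dict node -> {neighbor: seconds} (assumed symmetric).
    Returns (seconds_to_exit, next_hop), where next_hop[n] is the neighbor that
    node n should move to on its fastest path toward the exit.
    """
    dist = {exit_node: 0.0}
    next_hop = {}
    heap = [(0.0, exit_node)]
    done = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        for nbr, w in graph.get(node, {}).items():
            nd = d + w
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                next_hop[nbr] = node  # from nbr, step to node to head for the exit
                heapq.heappush(heap, (nd, nbr))
    return dist, next_hop


def edge_loads(vehicle_counts, next_hop):
    """Count vehicles per directed edge if every vehicle takes its fastest route out."""
    loads = Counter()
    for node, n_vehicles in vehicle_counts.items():
        while node in next_hop:  # the exit (and unreachable nodes) have no next hop
            nxt = next_hop[node]
            loads[(node, nxt)] += n_vehicles
            node = nxt
    return loads


graph = {
    "A": {"B": 50.0, "C": 60.0},
    "B": {"A": 50.0, "D": 50.0},
    "C": {"A": 60.0, "D": 60.0},
    "D": {"B": 50.0, "C": 60.0},
}
seconds_out, hop = evacuation_tree(graph, "D")
print(seconds_out)                            # fastest time from each node to exit D
print(edge_loads({"A": 3, "C": 2}, hop))      # vehicles carried by each segment
```

Segments with high load are congestion candidates; rerouting could then be explored by raising their travel times and rerunning the search. Because a single search serves every origin, moving the exit only requires one recomputation, which suits on-the-fly dashboard updates.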

Clicking on any node in the network will move the evacuation/ingress point to that node, which greatly expands the number of scenarios one can explore. Figure 2 shows a handful of the many possible use cases.

Figure 2. Example use of the dashboard.

In this blog I showed how to combine machine learning with graph theory to build a dashboard for exploring a multitude of transportation and logistics scenarios. Relying on nothing but overhead imagery, we are able to estimate congestion, determine bulk evacuation/ingress routes, mark critical nodes in the road network, and compute a host of other analytics. Our codebase is open source, with self-contained predictions and sample data that make the dashboard easy to spin up, and this explainer video illustrates how to use Shackleton.

A number of enhancements to Project Shackleton are possible, such as streamlining the dashboard (and efficiently plotting very large datasets) with HoloViz. Bespoke graph analytics modules are also easy to incorporate into the dashboard; for example, if one needed to evacuate while avoiding major highways, that logic could be added as a new module. I encourage the interested reader to tackle such enhancements, or to reach out with comments or suggestions.
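As an illustration of such a bespoke module, the sketch below routes while discouraging major highways by multiplying their travel time by a penalty factor; setting the penalty to infinity forbids them outright. The edge schema `(u, v, travel_time_s, road_class)`, the class labels in `AVOID` (borrowed from OpenStreetMap naming), and the function name `route_avoiding` are all hypothetical, and whether an imagery-derived graph carries a road-class attribute at all is an assumption.

```python
import heapq

AVOID = {"motorway", "trunk"}  # hypothetical road-class labels to avoid


def route_avoiding(edges, source, target, avoid=AVOID, penalty=10.0):
    """Fastest route where edges of an avoided class cost `penalty` times their travel time.

    edges: iterable of (u, v, travel_time_s, road_class) tuples (hypothetical schema).
    Returns (penalized_cost_s, path), or (inf, []) if the target is unreachable.
    Use penalty=float("inf") to forbid avoided roads entirely.
    """
    graph = {}
    for u, v, t, road_class in edges:
        cost = t * penalty if road_class in avoid else t
        graph.setdefault(u, []).append((v, cost))
        graph.setdefault(v, []).append((u, cost))
    best = {source: 0.0}
    heap = [(0.0, source, [source])]
    while heap:
        cost, node, path = heapq.heappop(heap)
        if node == target:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # stale heap entry
        for nbr, w in graph.get(node, []):
            new = cost + w
            if new < best.get(nbr, float("inf")):
                best[nbr] = new
                heapq.heappush(heap, (new, nbr, path + [nbr]))
    return float("inf"), []


roads = [("A", "D", 100, "motorway"), ("A", "B", 80, "residential"), ("B", "D", 80, "residential")]
print(route_avoiding(roads, "A", "D"))               # detours via B despite the direct motorway
print(route_avoiding(roads, "A", "D", penalty=1.0))  # no penalty: takes the motorway
```

A finite penalty still allows highways when no reasonable alternative exists, while an infinite one treats them as closed; which behavior is appropriate depends on the scenario.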

Adam Van Etten is a machine learning researcher with a focus on remote sensing and computer vision. Adam helped found the SpaceNet initiative and ran the SpaceNet 3, 5, and 7 Challenges. His recent research focuses on semi-automated dataset generation and on exploring the limitations and utility functions of machine learning techniques. Adam created Geodesic Labs in 2018 to explore the interplay between computer vision and graph theory in a disaster response context.

This year, Anaconda sought to amplify the voices of some of its most active and cherished community members in a monthly blog series. If you’re a Maker who has been looking for a chance to tell your story, elaborate on a favorite project, educate your peers, and build your personal brand, consider submitting content to Anaconda. To access a wealth of educational data science resources and discussion threads, visit Anaconda Nucleus.
