Substantial and impactful open-source innovation is at the heart of Anaconda’s efforts to provide tooling for developing and deploying secure Python solutions, faster. With the goal of capturing and communicating our teams’ many ongoing contributions to a wide variety of open-source projects, we are now providing regular roundups of related news items on our blog.

Highlights by Dev Group

Anaconda has many different teams working on open source, and each performs a wide variety of tasks. Below I will cover some of our core efforts and recent milestones. Please note that the split into bullets is merely for readability; in practice, many of us work across these divisions.

Dask and Data Access

Find, read, and write data in any format or location → here

- Dask-awkward, which originated in high-energy physics, has been rapidly scaling to very large compute operations. We now ensure that only the columns that are actually needed are loaded, even when the compute graph is many thousands of layers deep. Soon it will be possible to load only a subset of a JSON schema for vectorized, distributed processing, saving the memory that would otherwise be spent on leaves of the schema that are simply discarded. This will be a real first for nested/structured data processing in Python.

- Intake is undergoing a massive internal rewrite! This will take a while, but the aim is to come up with a design that is both simpler and more flexible. With this, we will be ready for users to define their own data for upload to Anaconda.cloud and share with friends and colleagues or even the wider world. The effort was motivated by two main considerations:

  - No one wants to write raw YAML just to encode their data dependencies. If we don’t want a separate class of data curators, we need to remove this barrier.

  - Unlike when Intake was born, there are now many ways to read your data, even if you need cluster/distributed systems. So we are decoupling the “data” definition (e.g., “it is a CSV at this URL”) from arguments to various readers. Thus, we can define in one place how to read with Dask, Spark, Ray, Polars, DuckDB, etc., and allow the user to select which is appropriate for their daily work.

- Filesystem Spec (fsspec) has, as usual, been undergoing rapid development and release cycles. Of particular interest, the testing of bulk operations (upload/download/copy/delete) has received a lot of attention, and we now have a test suite that checks many backends against the same expected behavior. Next, we will be rewriting the file caching layer to be flexible and pluggable, so that users can choose how filenames are stored and when to delete old copies or refresh files because of a new upstream version.

- Kerchunk finally fully supports storing references in optimized Parquet format—now also in write mode. This means that you can scan many thousands of original data files to produce an aggregated “logical” dataset over the whole thing (great for parallel cloud-native access), while maintaining both low memory overhead and fast random-access speed to any part of the data. Next, we will be concentrating on indexing into compressed files (gzip, ZIP, bzip2 and zstd) and updating reference sets when new data files arrive but the other files have not changed.
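The reference idea behind Kerchunk can be illustrated with a toy sketch that uses only the Python standard library. It is not Kerchunk's API: the function names `scan_file` and `read_chunk`, the key layout, and the fixed chunk size are all assumptions made for this example. The point is that scanning produces a small index of (file, offset, length) entries, and a reader can then fetch any piece of the combined "logical" dataset without opening or copying the rest.

```python
import os
import tempfile


def scan_file(path, chunk_size):
    """Return {key: (path, offset, length)} for one file, without copying any data."""
    size = os.path.getsize(path)
    name = os.path.basename(path)
    refs = {}
    for i, offset in enumerate(range(0, size, chunk_size)):
        refs[f"{name}/{i}"] = (path, offset, min(chunk_size, size - offset))
    return refs


def read_chunk(refs, key):
    """Random access: read only the bytes that one reference points to."""
    path, offset, length = refs[key]
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


# Create two small stand-in "original" data files (hypothetical content).
tmpdir = tempfile.mkdtemp()
paths = []
for name, payload in [("a.bin", b"0123456789"), ("b.bin", b"abcdefghij")]:
    path = os.path.join(tmpdir, name)
    with open(path, "wb") as f:
        f.write(payload)
    paths.append(path)

# Aggregate the per-file references into one logical dataset.
combined = {}
for path in paths:
    combined.update(scan_file(path, chunk_size=4))

print(sorted(combined))
print(read_chunk(combined, "b.bin/1"))
```

In this toy version the index lives in a dictionary. With many thousands of files the index itself becomes large, which is why the storage format used for the references matters.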

Jupyter

In-browser interactive development environment for Python and other languages → here

- The team has released the 1.0.0 version of nbclassic, a package that allows the “classic” Jupyter Notebook (equivalent to Notebook 6.5) to be installed and used alongside JupyterLab or Notebook 7 in an environment. We’ve also put out a release of the jupyter-nbextensions-configurator, which fixes some compatibility issues with nbclassic.

- Notebook version 7 is in its second release candidate stage, and we have been helping with bug fixes and feature requests ahead of the next big release.

- We are excited about jupyter-collaboration and have been working on creating a stress test framework to identify synchronization bugs that emerge at scale.

- Lots of other work has been going on to improve Jupyter documentation as we try to make the extension developer experience better.

HoloViz

Interactive visualization and plotting for PyData → here

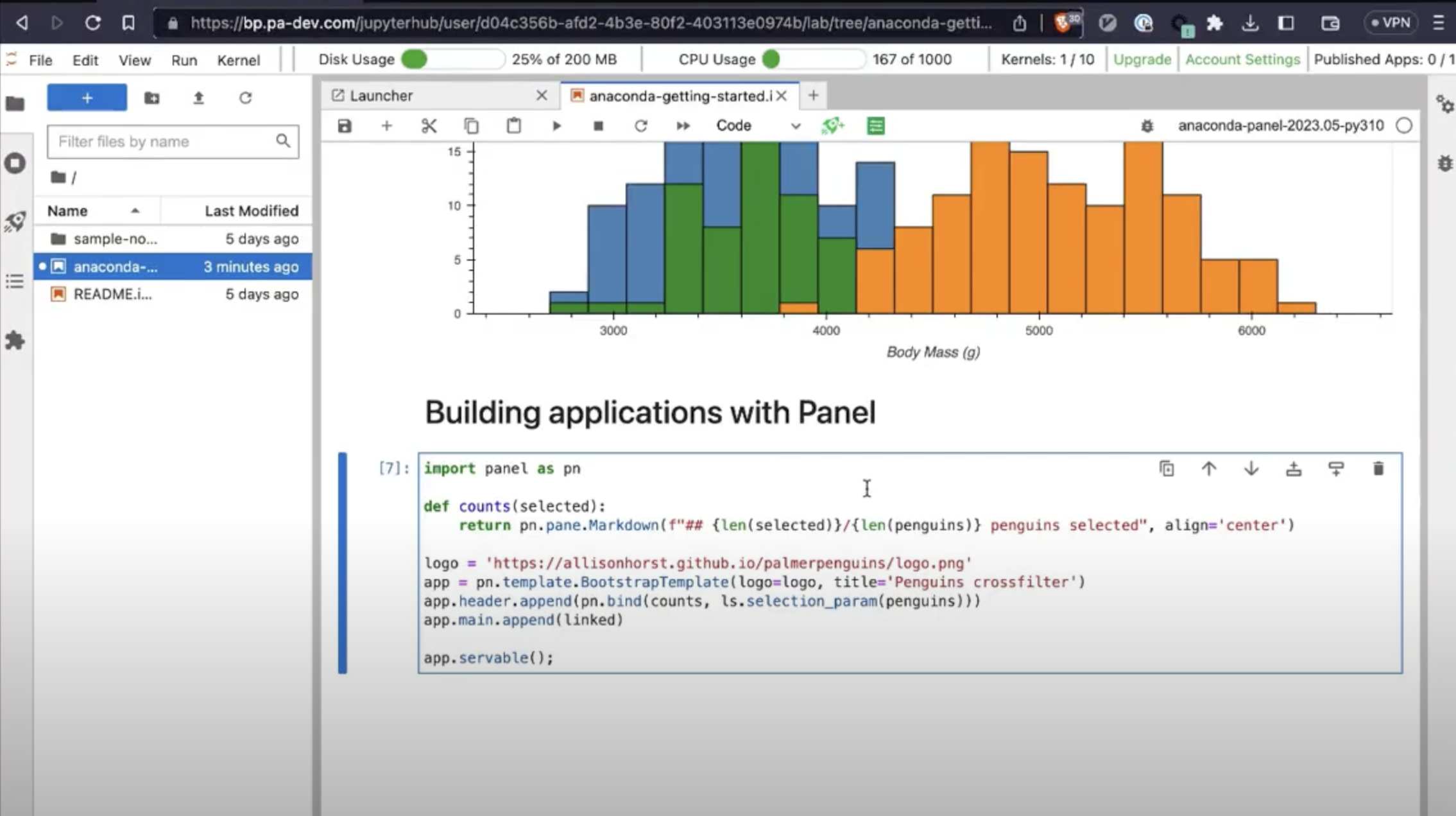

- Panel 1.0 and bugfix patches were released.

- Our data app contest is well underway, and we will soon be evaluating the entries. Thanks for all the interest from the community and excellent contributions so far!

Conda

The Python (and everything) package manager → here

- The conda team has officially launched conda.org! This website will be home for the entire conda community. It comes with its own blog page, with more articles you might find interesting. We are still very much actively developing it and welcome any contributions. This is part of the larger process of conda becoming a broader community with interaction standards and open stewardship.

- Conda-project is emerging as the successor to anaconda-project, offering a simple way to encapsulate code, notebooks/dashboards, environments, and run commands in a single folder.

BeeWare

Run Python apps on any platform, including mobile → here

- BeeWare has been undergoing a huge audit of all of the widget elements across all of the supported backends, with greatly expanded testing and documentation along the way, to ensure consistent behavior in all situations. We have almost completed this work, with a goal to be done early in Q3.

- The web backend has been consistently improved, particularly for Pyodide compatibility, and is catching up to the mobile and desktop implementations.

- Updates to cross-platform testing within Briefcase allow for assessing coverage before kicking off full CI on various backends.

- Watch our talks from PyCon:

Numba

Accelerate your Python numerical code with JIT compilation → here

- Numba 0.57.0 and 0.57.1 (along with llvmlite 0.40.0 and 0.40.1) were released. These were huge releases that added support for Python 3.11, NumPy 1.24, and LLVM 14.

- Following a ton of work refactoring the core internals of Numba, an alternative front end to the compiler chain is finally available, if experimental, for interested testers (see here). This change gives an intermediate code describing the loops and branch structure of the original Python, having taken the specifics of the Python bytecode out of the equation. It will (in the long run) provide a more stable representation for the rest of the Numba machinery to work from, be more approachable to outsiders, and even be useful for non-Numba use cases.

- Python 3.12 is coming quickly, and attention will shift to supporting it so that Numba can be used with this new Python release as soon as possible after it becomes publicly available. The alternative front end described in the previous bullet should be very helpful in cranking this out!
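To see why a representation that is independent of Python bytecode is attractive, the sketch below uses only the standard-library `dis` module to list the jump instructions that CPython emits for a small loop. This is not Numba's front end, and the helper `control_flow_summary` is a name invented for this example. It only shows the raw material a compiler has to work from: the opcode names it prints differ between CPython versions, which is the kind of detail a loop-and-branch representation is meant to hide.

```python
import dis


def total(values):
    """A small loop with one branch, the kind of code a JIT front end must analyze."""
    acc = 0
    for v in values:
        if v > 0:
            acc += v
    return acc


def control_flow_summary(func):
    """Return the jump-like instructions and the offsets that are jump targets."""
    instructions = list(dis.get_instructions(func))
    jumps = [
        (ins.offset, ins.opname)
        for ins in instructions
        if "JUMP" in ins.opname or ins.opname == "FOR_ITER"
    ]
    targets = [ins.offset for ins in instructions if ins.is_jump_target]
    return jumps, targets


jumps, targets = control_flow_summary(total)
for offset, opname in jumps:
    print(offset, opname)
print("jump targets:", targets)
```

Running this under two different Python versions and comparing the printed opcode names gives a feel for how much version-specific detail sits between the source code and its loop and branch structure.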

Other

- We encourage you to register for an upcoming live training: Data Visualization with PyScript. Learn about the basic functions and applications of PyScript, how PyScript can be used to display and share data visualizations, and more.

Stay in Touch!

We welcome any feedback on the delivery of our OSS updates, so please don’t hesitate to get in touch with us via your preferred project channel, social media, or Anaconda Cloud.

You may also be interested in some of our other recent software activities, covered on our blog:

See you next quarter!

About the Author

Martin Durant is a former astrophysicist with several years of scientific research experience. He has also worked in medical imaging, building AI/ML pipelines and a research platform. After a brief stint as a data scientist in ad-tech, Martin moved to Anaconda to work on PyData education. He now leads a number of open-source PyData projects, focussing on data access, formats, and parallel processing.