At Anaconda, we’ve developed a rigorous new approach to AI development called Evaluations Driven Development (EDD). By continuously testing AI models using real-world cases and user feedback, EDD enables us to create AI assistants that are reliable, relevant, and truly impactful for users.

Our Anaconda Assistant, an AI coding companion for data scientists, exemplifies the power of EDD. Trained on real code samples, errors, and fixes, it offers in-context suggestions and debugging help to supercharge your Python workflow. And thanks to EDD, it keeps getting smarter with each update.

We believe EDD is the future of AI development, ensuring that AI tools don’t just demo well, but deliver real value. If you’re excited by AI’s potential but wary of the hype, read on to learn how EDD works and why it’s a game-changer for building AI applications that make a difference.

The Anaconda Assistant: Your AI-Powered Data Science Sidekick

As a data scientist working with Python, you know the frustration of hitting roadblocks in your code. That’s where the Anaconda Assistant comes in.

Leveraging state-of-the-art language models trained on real-world Python code, errors, and solutions, the Assistant offers a range of features to streamline your workflow:

- Generating complex code snippets with clear explanations

- Suggesting improvements to code style and readability

- Providing in-context explanations for functions and modules

- Recommending data preprocessing techniques tailored to your project

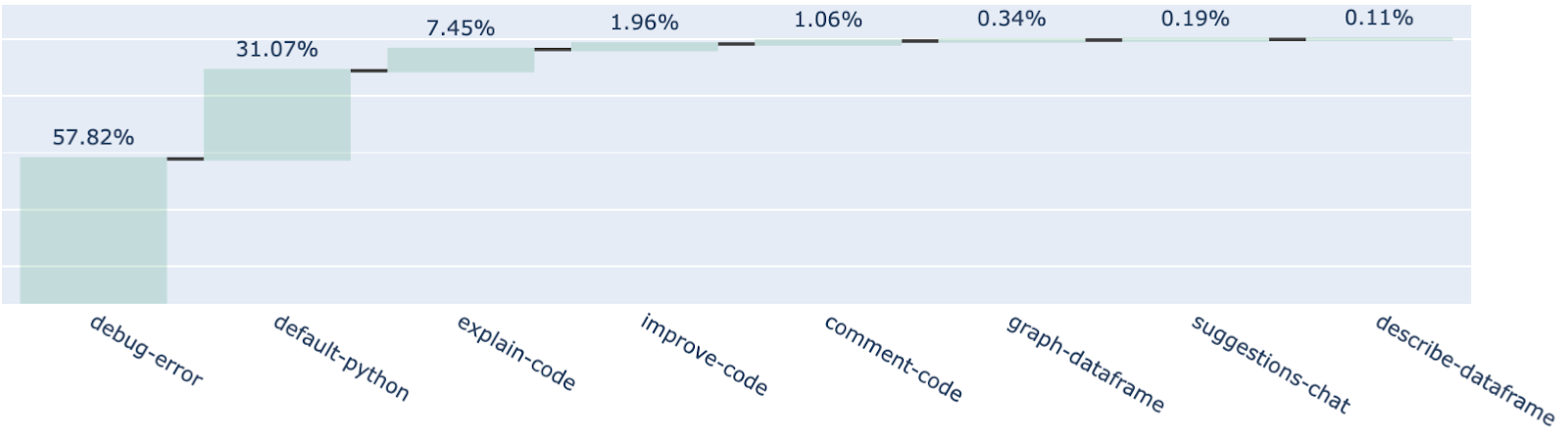

But the Assistant’s most popular feature by far is intelligent debugging. Telemetry data shows that 60% of user interactions involve asking for help with pesky errors.

Just describe your error to the Assistant and within seconds, you’ll get a plain-English explanation of the problem and suggestions for fixing it. No more hours lost scouring Stack Overflow!

Best of all, the Assistant is always getting smarter thanks to our Evaluations Driven Development (EDD) process. Every interaction with users who have consented to data collection is an opportunity to refine the prompts and queries we use to elicit relevant, reliable support from the underlying language models.

In the next section, we’ll explore how EDD enables us to continuously improve Anaconda Assistant without the need for costly and time-consuming model retraining.

Our Approach: Evaluations Driven Development with “llm-eval”

Our Evaluations Driven Development (EDD) methodology, powered by our in-house “llm-eval” framework, consists of rigorously testing and refining the prompts and queries we use to elicit relevant, reliable outputs from the underlying language models. Rather than just optimizing for abstract benchmarks, we evaluate the Anaconda Assistant on its ability to handle the actual challenges faced by data scientists in their daily work.

This is where “llm-eval” comes in. It’s a comprehensive testing framework that allows us to simulate thousands of realistic user interactions and assess the Assistant’s responses across a wide range of scenarios, from debugging complex errors to generating readable, well-documented code.

Here’s a high-level overview of how it works:

- We start by defining key evaluation criteria, focusing on the areas that matter most to our users, such as the accuracy of error explanations and the clarity of code explanations.

- Next, we curate a diverse set of test cases spanning the most common tasks and challenges encountered by data scientists, from simple syntax errors to complex issues around data types and performance.

- We then run the Anaconda Assistant through each of these test cases and evaluate its performance against our criteria. “llm-eval” automates much of this process, allowing us to efficiently test the Assistant across a huge range of scenarios.

- Based on the results, we identify areas where the Assistant’s outputs could be improved and use those insights to refine our prompts, queries, and knowledge bases.

- Finally, we re-run our evaluations to verify that our refinements have led to meaningful improvements in the Assistant’s performance. And then the cycle begins anew, with each iteration driving the Assistant towards ever-greater levels of reliability and usefulness.

To make this a bit more concrete, let’s take a closer look at how we’ve applied EDD to one of the Anaconda Assistant’s most important capabilities: error handling.

Error Handling: A Case Study in EDD

Error handling is a critical aspect of the Assistant’s functionality, as data scientists rely on clear, actionable guidance when encountering bugs in their code. To assess the Assistant’s performance in this area, we used the “llm-eval” framework to systematically test its ability to diagnose and fix errors across a wide range of real-world debugging scenarios.

One particularly illuminating case study involved the following code, which raises a `ValueError` when an invalid age is passed to the `create_person` function:

class Person:

def __init__(self, name, age):

self.name = name

self.age = age

def __str__(self):

return f"Name: {self.name}, Age: {self.age}"

def create_person(name, age):

if age < 0:

raise ValueError("Age cannot be negative.")

return Person(name, age)

def print_person_details(person):

print(f"Person Details: {person}")

# Example usage

people = [

create_person("Alice", 25),

create_person("Bob", -5), # Raises ValueError

create_person("Charlie", 30),

]

for person in people:

print_person_details(person)We provided the Assistant with this code snippet and the corresponding error message, and evaluated its responses based on accuracy: Does it correctly identify the root cause and provide a fix that resolves the issue?

To evaluate the Assistant’s performance, we tested its responses using different language models, versions, and temperature settings. In AI models like the one behind the Anaconda Assistant, the temperature setting controls the randomness and creativity of the generated text. Lower temperatures lead to more precise but conservative responses, while higher temperatures allow for greater diversity but potentially at the cost of coherence or factual accuracy. Finding the right balance is key to achieving reliable, high-quality results.

The initial evaluation results were eye-opening:

| Model | Temperature | Iterations | Success Rate |

| GPT-3.5-Turbo (v0125) | 0 | 500 | 12% |

| GPT-3.5-Turbo (v0125) | 1 | 500 | 13% |

| Mistral 7B Instruct v0.2 | 0 | 500 | 0% |

| Mistral 7B Instruct v0.2 | 1 | 500 | 2% |

Under these conditions, the Anaconda Assistant was only able to correctly identify the bug and provide a working solution in at most 13% of the test executions. However, rather than being discouraged by these results, we saw them as a valuable opportunity to leverage the insights gained from our “llm-eval” framework to drive meaningful improvements in the Assistant’s performance. By carefully crafting the prompts and queries used to elicit error explanations and fixes, and by iterating on these prompts based on the evaluation results, we believed we could significantly enhance the Assistant’s error handling capabilities. In the next section, we’ll explore how we are applying these prompt engineering techniques to transform the Assistant into a robust and reliable debugging companion.

Prompt Engineering and Evaluation

Prompt engineering is the process of carefully designing the text input (the “prompt”) given to an AI model to guide it towards producing the desired output. Just as a well-worded question can help a human give a more relevant and useful answer, an effectively engineered prompt can significantly improve the quality of an AI’s responses. This might involve techniques like providing examples, breaking down complex tasks into steps, or specifying format requirements. To improve the Anaconda Assistant’s accuracy in error handling, we employed several prompt engineering techniques that have proven effective in guiding language models towards more accurate and relevant responses:- Few-shot learning: By providing examples of previously explained errors and their fixes in the prompt, we aimed to guide the model towards generating more accurate error explanations and code corrections. These examples were carefully selected from a dataset of common Python errors and their corresponding fixes, ensuring that they were representative of the types of issues users might encounter.

- Chain-of-thought prompting: We structured the prompt to ask for an explanation of the error before requesting the fixed code. This approach encourages the model to reason about the problem step-by-step, potentially leading to more reliable solutions. By breaking down the problem-solving process into discrete steps, we hoped to mimic the way a human developer might approach debugging.

system:

You are an AI Assistant called 'Anaconda Assistant'. As a Jupyter Notebook expert, your main task is to help debug code and briefly explain errors. Ask before providing corrected code. If providing corrected code, only provide the corrected code, nothing else.

[...]

user:

```python

def fibonacci(n):

fib = [0, 1]

for i in range(2, n+1):

fib.append(fib[i] + fib[i-1])

return fib fibonacci(10)

```

<output>

IndexError Traceback

----> 1 fibonacci(10)

----> 4 fib.append(fib[i] + fib[i-1])

</output>

Explain this error.

assistant:

The error occurs because the code is trying to access an index that is not yet in the list. To fix this error, you need to change the indexing in the loop.

The error occurs in the following line:

```python

fib.append(fib[i-1] + fib[i-2])

```

Explanation:

The code tries to access `fib[i]` and `fib[i-1]` to calculate the next Fibonacci number. \nHowever, `fib[i]` is not yet in the list, causing the IndexError. By changing the line to `fib.append(fib[i-1] + fib[i-2])`, \nwe correctly access the two previous Fibonacci numbers to calculate the next one.

[...]

```python

{error_cell_code}

```

<output>

{error_output}

</output>

Explain this error.- The overall accuracy rate for explaining and fixing different types of errors

- Specific patterns and edge cases where the Assistant struggled (e.g., errors involving complex data structures or multiple interrelated files)

- The effectiveness of different prompt engineering techniques in improving accuracy

How It Works: Agentic Feedback Iteration

As part of our Evaluations Driven Development (EDD) framework, we are employing an innovative technique called Agentic Feedback Iteration to further refine the prompts and queries used in the Anaconda Assistant. This process leverages the advanced capabilities of large language models to provide targeted feedback and suggestions for improvement based on the evaluation results.

Here’s how it works:

- We feed the evaluation results, including the original prompts, queries, generated responses, and accuracy metrics, into a language model.

- The model analyzes this data and provides specific suggestions for how to modify the prompts and queries to address the identified weaknesses and edge cases. For example, it might recommend adding more context to help the Assistant better understand the user’s intent, or adjusting the format to prioritize certain types of responses.

- We incorporate the model’s suggestions into the prompts and queries, and re-run the evaluation process to measure the impact on accuracy.

- We repeat this iterative process, with the model providing further rounds of feedback and refinement, until we achieve a significant increase in response accuracy.

By leveraging the advanced language understanding and generation capabilities of large language models, Agentic Feedback Iteration allows us to rapidly optimize the prompts and queries in a data-driven, targeted manner.

The specific changes made to the prompts and queries through this process directly address the key challenges and opportunities identified in our initial evaluations. For example:

User prompt modification:

- Original: “Explain this error”

- Updated: “How can I fix this error?”

System prompt changes:

- Original: “You are an AI Assistant called ‘Anaconda Assistant’. As a Jupyter Notebook expert, your main task is to help debug code and briefly explain errors. Ask before providing corrected code. If providing corrected code, only provide the corrected code, nothing else.”

- Updated: “As the ‘Anaconda Assistant’, you are a Jupyter Notebook expert tasked with debugging Python code and explaining errors to users. Follow these guidelines when responding to queries: 1. Provide code snippets with their output errors. 2. Explain the reason for the error in simple terms. 3. Before providing a corrected version of the code, ask if permission is granted. 4. Provide the corrected code in a single Python code block that addresses the specific error mentioned. 5. When explaining errors or providing corrected code, include comments in the code to highlight changes and reasons for these changes.”

Through multiple rounds of Agentic Feedback Iteration, we are significantly improving the accuracy of the Anaconda Assistant’s responses, particularly on the most challenging and nuanced error scenarios. In the next section, we’ll dive into the detailed results of this optimization process and explore some of the most impressive improvements achieved.

Results

To assess the impact of our prompt engineering efforts and the Agentic Feedback Iteration process, we conducted a new round of evaluations using the refined prompts. We focused on the same error handling scenario, running it hundreds of times to check whether the generated code still included the original bug or provided a successful fix.

- OpenAI GPT-3.5-Turbo (0125) Temperature 0: 87% success rate (up from 12%)

- OpenAI GPT-3.5-Turbo (0125) Temperature 1: 63% success rate (up from 13%)

- Mistral 7B Instruct v0.2 – NVIDIA A10G – Temperature 0.1: 87% success rate (up from 0%)

- Mistral 7B Instruct v0.2 – NVIDIA A10G – Temperature 1: 100% success rate (up from 2%)

The results showed a remarkable improvement across all models and settings compared to our initial evaluations. The most significant gains were observed with the Mistral 7B model, which achieved a perfect 100% success rate when using a temperature setting of 1. This means that, under these conditions, the Anaconda Assistant was able to correctly identify the bug and generate a working solution in every single test execution.

These results provide compelling evidence for the power of Evaluations Driven Development and the transformative potential of advanced language models combined with rigorous testing, prompt engineering, and techniques like Agentic Feedback Iteration. By continuously refining our approach and expanding its applications, we believe we can unlock even greater levels of performance and versatility, not just for the Anaconda Assistant but for AI-powered tools across a wide range of domains.

In the next section, we’ll explore some of the exciting future directions for EDD and the Anaconda Assistant, and how you can get involved in shaping the future of AI-assisted data science.

What’s Next for Evaluation Driven Development in Anaconda

Our Evaluations Driven Development (EDD) process represents a paradigm shift that is revolutionizing how we build, test, and deploy AI-powered tools across industries. With EDD, we’re building trust, reliability, and unprecedented capabilities into the very fabric of AI development.

The success of EDD in dramatically improving the performance and reliability of the Anaconda Assistant is just the beginning. As we look to the future, we are excited to explore new opportunities to build upon this foundation and drive even greater innovation and impact.

Our top priorities for the future of EDD include:

- Expanding the “llm-eval” framework: By incorporating more complex, multi-step coding challenges and domain-specific evaluation criteria, we can assess the performance of our AI solutions with unprecedented depth and precision, identifying new opportunities for improvement and optimization.

- Collaborating with the open-source community: We are committed to making the “llm-eval” framework publicly available in the near future, empowering other organizations to adopt and build upon our Evaluations Driven Development approach.

- Integrating user feedback for continuous improvement: By analyzing usage patterns, feature requests, and performance ratings, we can prioritize improvements that directly address the needs and preferences of our users, ensuring that our solutions not only meet but exceed their expectations.

Whether you’re a data scientist, developer, or AI enthusiast, there are many ways to get involved and contribute to the future of EDD and the Anaconda Assistant. From trying out the Assistant in your own projects and providing feedback, to contributing to the development of the “llm-eval” framework once we release it. Your input and expertise are invaluable in shaping the future of AI-powered innovation.

Embracing the Future of AI-Driven Development

Imagine a future where AI-powered tools like the Anaconda Assistant are not just nice-to-haves, but indispensable partners in data science workflows, enabling users to focus on high-level problem-solving while AI handles the repetitive tasks. Where AI-driven code analysis and generation tools help developers write more reliable, efficient, and secure software.

This is the future that Evaluations Driven Development is meant to enable. By combining rigorous, data-driven testing with targeted prompt engineering and techniques like Agentic Feedback Iteration, we’re not just improving AI models—we aim to fundamentally change the way we develop and deploy AI.

The future of AI-driven development is bright, and with Evaluations Driven Development as our guiding light, we’re excited to see where this journey takes us. Let’s embrace this new paradigm together, and unlock the full potential of AI to transform our world for the better.