Artificial intelligence (AI) is undeniably and rapidly transforming life as we know it. Leading tech companies are investing heavily, and Google CEO Sundar Pichai has compared the impact of AI to electricity and fire. Organizations that have adopted AI to improve products and services are seeing significant financial returns on their investments. We are just beginning to see the promise of AI come to life.

However, realizing business value with AI remains an elusive target for many organizations. Tools are ever-changing, use cases are disparate and scattered across the enterprise, and it’s incredibly complex to deploy useful AI into production. Data science and engineering teams that want to apply machine learning and deep learning to solve business problems face a confusing array of platforms, libraries, and software.

Evaluating and making decisions about how to apply the latest tools and techniques to develop, deploy, and maintain high-performing models requires a cross-functional effort among people, strategic investments in technology, and constant recalibration of processes.

We’ve created this guide to be a handy reference about AI platforms, common use cases, and factors to consider when evaluating AI platforms. Please bookmark this page and share it with colleagues if you find it helpful.

Introduction

In this guide, we will cover AI platforms for open-source data science and machine learning. We will define and consider the pros and cons of AI platforms that can facilitate your development and deployment of data science and machine learning algorithms, models, and systems. We will explore common use cases among enterprise organizations and some of the open-source tools available to you as you seek to harness the power of AI at scale. Finally, we will share best practices when considering an AI platform, including questions to ask potential technology providers.

AI Platforms, Data Science, and Open Source

Let’s begin with the basics, to inform those who are learning about AI platforms, machine learning, and deep learning—and also to set the stage for how we’ll be thinking about tools and techniques throughout this guide.

What is an AI platform?

An artificial intelligence (AI) platform is an integrated set of technologies that allows people to develop, test, deploy, and refresh machine learning (ML) and deep learning models. AI applies a combination of tools and techniques that make it possible for machines to analyze data, make predictions, and take action faster and more accurately than humans can manually.

Enterprise AI platforms enable organizations to build and maintain AI applications at scale. An AI platform can:

- Centralize data analysis and data science collaboration

- Streamline ML development and production workflows, which is sometimes referred to as ML operations or MLOps

- Facilitate collaboration among data science and engineering teams

- Automate some of the tasks involved in the process of developing AI systems

- Monitor AI models and systems in production

How can you use an AI platform?

There are many ways to prepare an AI platform for data science and engineering practitioners. Your approach will vary based on your organization’s financial resources, your team’s compute and data processing infrastructure, and your team’s expertise. In general, you have three options when it comes to creating an environment for teams to collaborate on building and deploying AI:

Choice 1: Buy an end-to-end AI platform

There are a few big businesses that dominate the end-to-end AI platform market. Google Cloud Platform (GCP), Microsoft Azure, and Amazon Web Services (AWS) are all leaders in the space, offering end-to-end solutions and tools, such as Amazon SageMaker, for organizations looking to implement AI into their operations. This can be an expensive option that can lock you into using only certain tools or technologies, so it’s important to evaluate AI platforms carefully for the specific value they offer.

Each platform has its own strengths and weaknesses, so it’s important to choose one that will fit your specific use cases and will integrate seamlessly with other technologies you have in place across your organization.

Benefits

You can start fast and use tools that have enterprise-ready support. Tools and software are updated often for better reliability, performance, and security.

Challenges

Tools may not be configured for your particular use cases, which will require planning for more engineering support at the start. As your project evolves and you recalibrate resources along the way, tools you chose before may not support new requirements.

Choice 2: Build your own AI platform

Many enterprise organizations choose to build their own AI platforms. The choice to build your own AI platform often comes down to whether your project leaders want elements of that AI technology stack to be built and owned by the organization.

That is what disruptors Uber and Netflix did; they have built their own MLOps and AI platforms by stringing together their own combination of open-source tools, proprietary models, and enterprise providers of cloud infrastructure or compute resources. These companies have set the standard for AI success by leveraging data science, open-source tools, and proprietary algorithms to disrupt legacy industries.

Benefits

This approach can give you and your team more control over your workflow, development process, and deployment strategies. It also allows you to respond quickly as business requirements change over time.

Challenges

You will use more time and expense at the start to develop your own platform and supporting capabilities. You also will be responsible for the ongoing expenses of maintaining the system.

Choice 3: Open-source software

Open-source software is a great choice for building, deploying, and maintaining AI applications. It allows you to choose the best tools for your specific use case and leverage the power and innovation of the crowd of practitioners who are contributing to open-source tools, libraries, and frameworks.

Anaconda is a popular choice among data scientists, because it allows them to build environments where they can import and access the best open-source tools available for doing science with data. It is a Python-native application with 30 million users who contribute to the innovation, security, and techniques of open-source data science software.

Anaconda can be an integral part of your AI platform, and it is geared specifically for data science and Python users who see value in the open-source opportunity. IT administrators like Anaconda because they can use it to centralize and secure the work of data science teams, which feed the development and production of machine learning models and systems. Microsoft and Snowflake have embedded Anaconda into their data science and ML products to make it easy to accelerate data science exploration and production deployments.

Benefits

This approach gives your practitioners access to the best tools available for your use case. Users can access repositories, projects, and code that can accelerate development. Security vulnerabilities in open-source tools and packages can be identified and verified earlier, using the power of the crowd in the open-source ecosystem.

Challenges

You will need an open-source mindset, which requires deep collaboration. You’ll also need a talented team with a clear understanding of the problem to be solved, with access to ongoing training and upskilling opportunities.

The Benefits of Open Source in Data Science and Machine Learning

Using free, open-source tools and libraries, you can quickly create powerful AI solutions with minimal effort. There are many benefits to using open-source software, including the ability to collaborate with others, to contribute to the open-source community, and to distribute the software with minimal restrictions, depending on the software license.

Python is the most popular open-source software tool available for data science and AI. It is a versatile language that can be used for data science, AI, machine learning, and deep learning. Python is so versatile because it has a vibrant and strong open science ecosystem, with a large community of developers who create libraries and tools that make it easier to use. R is another open-source language, designed specifically for statistical analysis and data visualization. It is also widely used in machine learning and deep learning.

Anyone can use these tools without having to pay for a license. This makes them accessible to a wide range of people, including students and researchers who might not be able to afford proprietary commercial tools.

The benefits of open-source software for data science and machine learning include:

- Low barriers to entry: low- to no-cost access to some of the best tools available

- Reliability, security, and speed: the power of the community

- Access to open-source tools and talent: the community that uses the tools

- Libraries of data and models for data science and ML

- Education in open source for data science and ML

Why are there so many AI solutions?

The recent explosive growth of AI, as evidenced by advances in generative AI including Stable Diffusion and ChatGPT, is largely due to a form of machine learning called deep learning. Advances in self-driving cars and smart assistants, for example, are due to significant improvements in deep learning models.

Deep learning is basically a new term for an old concept: neural networks, which are collections of mathematical operations performed on arrays of numbers. But the models generated by deep learning techniques are significantly more complex or “deep” than traditional neural networks, and also involve much greater amounts of data.
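To make "mathematical operations performed on arrays of numbers" concrete, here is a minimal sketch of a tiny two-layer network computing a single prediction. The weights, layer sizes, and function names are hypothetical and hand-picked for illustration; a real deep learning model would learn millions of such numbers from data using a framework such as TensorFlow or PyTorch.

```python
import math

def dense(inputs, weights, biases):
    """One layer: a weighted sum of the inputs plus a bias for each output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def relu(values):
    """Nonlinearity applied between layers: negative values become zero."""
    return [max(0.0, v) for v in values]

def sigmoid(value):
    """Squash a single number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))

# Hypothetical, hand-picked weights (assumption: 2 inputs, 3 hidden units, 1 output).
HIDDEN_W = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]
HIDDEN_B = [0.0, 0.1, -0.1]
OUTPUT_W = [[0.7, -0.5, 0.2]]
OUTPUT_B = [0.05]

def forward(inputs):
    """Run the inputs through both layers and return a score between 0 and 1."""
    hidden = relu(dense(inputs, HIDDEN_W, HIDDEN_B))
    (logit,) = dense(hidden, OUTPUT_W, OUTPUT_B)
    return sigmoid(logit)

score = forward([1.0, 2.0])
print(f"score = {score:.3f}")
```

A "deep" network stacks many more of these layers, which is where the larger data and compute requirements come from.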

Over the last few years, the combination of massive data, open-source advances in deep neural networks, and powerful hardware with faster processing speeds has led to breakthroughs in a number of areas, including image classification, speech transcription, and autonomous driving. These advances have brought about tremendous economic implications.

Data scientists who attempt to deploy models find themselves wearing several hats: data scientist, software developer, IT administrator, security officer. They struggle to build and wrap their model, serve their model, route traffic and handle load-balancing, and ensure all of this is done securely. However, to achieve business value with AI, models must be deployed. And model deployment and model management are difficult tasks to complete, let alone automate.

The open-source community has only begun to create tooling to ease this challenge in model development, training, and deployment. Much of this work has been done by researchers at large companies and teams at startup-turned-enterprise companies like Uber and Netflix.

To start, Uber’s data scientists could only train their models on their laptops, inhibiting their ability to scale model training to the data volumes and compute cycles required to build powerful models. The team did not have a process for storing model versions, which meant data scientists wasted valuable time recreating the work of their colleagues. So Uber built and continues to evolve its own machine learning operations (MLOps) platform, Michelangelo.

While Uber and other companies have applied machine learning to innovate products, they did not start with a standard process for deploying their models into production, thereby severely limiting the potential business value from their work. Uber’s team reported having no established path to deploying a model into production—in most cases, the relevant engineering team had to create a custom serving container specific to the project.

To focus on building better models, data scientists at Google, Uber, Facebook, and other leading tech companies built their own AI platforms. These platforms automate the supporting infrastructure—known as the glue code—that is required to build, train, and deploy AI at scale.

AI Use Cases By Industry

Explore concrete examples of where these advances are being applied in industry today.

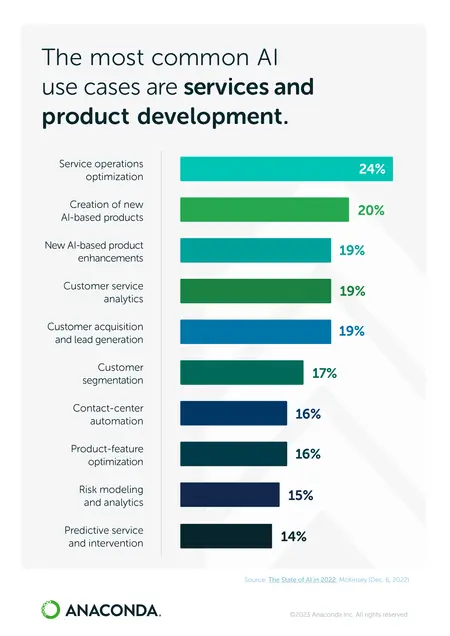

AI adoption and investments are on the rise, and organizations applying AI are generating substantial returns, according to a McKinsey survey and report on The State of AI in 2022. Common AI use cases include services and product development, with other AI capabilities being added to the list all the time.

Furthermore, while AI can represent a huge opportunity for your organization, keep in mind that it also represents a huge opportunity for your competitors—including organizations you might not be competing with today but soon will be, due to their advances in applying AI. The message is clear: organizations must adopt AI now or risk getting left behind.

The International Data Corporation (IDC) predicts that the global AI market will grow to USD $900 billion by 2026. IDC’s report forecasts 18.6% compound annual growth. Deep learning has achieved amazing breakthroughs in image classification, speech recognition, handwriting transcription, and text-to-speech conversion, among other areas.

Let’s explore some concrete examples where these advances are being applied in industry today.

Banking

Banks have been creating business value with AI for years now by transforming how customers interact with them, especially remotely. For some consumers, using a mobile banking application was among their first interactions with AI: banks use computer vision to detect check fraud in mobile check deposits, where the consumer takes a photograph of both sides of a check to deposit the funds into their account.

McKinsey research dated May 2021 estimates AI technologies for global banking could create up to USD $1 trillion of additional value each year, considering how AI can lower costs, reduce errors, and create new opportunities for banking organizations.

Here are common AI use cases in banking:

- Fraud detection: Fraud detection is one of the most common applications of machine learning in banking. Fraud detection algorithms can parse multiple data points from thousands of transaction records in seconds, such as cardholder identification data, where the card was issued, the time the transaction took place, the transaction location, and the transaction amount.

To implement a fraud detection model, multiple accurately tagged instances of fraud should already exist in a data set to properly train the models. Once the model detects an anomaly among transaction data, a notification system can be programmed to alert fraud detection services the moment the model identifies a suspicious transaction.

Fraud detection is a form of anomaly detection. The same algorithms can also be applied to data sets in other areas of the company to serve different purposes, such as network intrusion detection. This is one reason why some companies find more value in investing in an enterprise data science platform, rather than purchasing out-of-the-box models or point analytic solutions.

- Credit scoring: Banks also rely on AI to establish accurate credit scoring. Many lending institutions see the benefit in developing custom credit-scoring models that use the institution’s own customer activity data, rather than generic scores from the top three score reporting agencies in the US, to better predict the risk or opportunity of extending a new line of credit. By doing so, they can reduce delinquency costs that come from loan write-offs, delayed income from interest, and the servicing cost of trying to collect late payments. Customers can be re-evaluated in real time: predictive machine learning algorithms update these scores as new data rolls in, so lenders are always working from the most up-to-date information. These models are trained on data from past loans, provided there is enough data from both good and bad loans to train them effectively.

- Credit risk analysis: These predictive algorithms can also be utilized at the macro level to assess risk and predict market movement. Financial institutions use credit risk analysis models to determine the probability of default of a potential borrower. The models provide information on the level of a borrower’s credit risk at any particular time. If the lender fails to detect the credit risk in advance, it exposes them to the risk of default and loss of funds. Lenders rely on the validation provided by credit risk analysis models to make key lending decisions on whether or not to extend credit to the borrower and the credit to be charged.
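As a rough illustration of how a model can estimate a borrower's probability of default from past loans, the sketch below fits a small logistic regression with plain gradient descent. The loan records, the two features (debt-to-income ratio and missed payments), and all function names are hypothetical; a production credit model would use far more data, more features, and a tested library such as scikit-learn.

```python
import math

# Hypothetical past loans: (debt-to-income ratio, missed payments last year, defaulted?)
PAST_LOANS = [
    (0.10, 0, 0), (0.15, 0, 0), (0.20, 1, 0), (0.25, 0, 0), (0.30, 1, 0),
    (0.45, 2, 1), (0.50, 3, 1), (0.55, 2, 1), (0.60, 4, 1), (0.35, 3, 1),
]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(loans, epochs=5000, learning_rate=0.1):
    """Fit logistic regression weights with batch gradient descent."""
    w_dti, w_missed, bias = 0.0, 0.0, 0.0
    n = len(loans)
    for _ in range(epochs):
        g_dti = g_missed = g_bias = 0.0
        for dti, missed, defaulted in loans:
            # Prediction error for this loan drives the gradient.
            error = sigmoid(w_dti * dti + w_missed * missed + bias) - defaulted
            g_dti += error * dti
            g_missed += error * missed
            g_bias += error
        w_dti -= learning_rate * g_dti / n
        w_missed -= learning_rate * g_missed / n
        bias -= learning_rate * g_bias / n
    return w_dti, w_missed, bias

def probability_of_default(model, dti, missed):
    """Score one applicant with the fitted weights."""
    w_dti, w_missed, bias = model
    return sigmoid(w_dti * dti + w_missed * missed + bias)

model = train(PAST_LOANS)
low_risk = probability_of_default(model, dti=0.10, missed=0)
high_risk = probability_of_default(model, dti=0.60, missed=4)
print(f"low-risk applicant:  {low_risk:.2f}")
print(f"high-risk applicant: {high_risk:.2f}")
```

Retraining on new loan outcomes as they arrive is what allows scores to stay current, and it only works when the history contains enough examples of both repaid and defaulted loans.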

Other common AI use cases in banking include:

- Account risk analysis

- Credit-line adjustment approvals

- Customer segmentation

- Personalized offers

- Strategic pricing models

Ecommerce

Amazon was among the first to introduce consumers to AI in ecommerce, though at the time it looked more like magic coming from a new online bookseller. In 2003, Amazon’s researchers published a paper describing their use of a recommendation algorithm called collaborative filtering, which initially predicted a customer’s preferences from the preferences of other shoppers.

Amazon’s AI team learned that analyzing purchase history at the product level yielded better recommendations than results at the customer level. This was groundbreaking work, and since then, ecommerce companies have expanded their use of AI significantly.
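The product-level idea can be sketched in a few lines. The example below is an illustrative toy, not Amazon's implementation: it scores how similar two products are by how many customers bought both (cosine similarity), then recommends the products most similar to what a customer already owns. The purchase data and function names are hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical purchase histories: customer -> set of products bought.
PURCHASES = {
    "ana": {"novel", "cookbook", "bookmark"},
    "ben": {"novel", "bookmark"},
    "cho": {"cookbook", "apron"},
    "dee": {"novel", "bookmark", "reading lamp"},
}

def item_similarities(purchases):
    """Cosine similarity between products, based on shared buyers."""
    buyers = defaultdict(set)
    for customer, items in purchases.items():
        for item in items:
            buyers[item].add(customer)
    sims = defaultdict(dict)
    for a in buyers:
        for b in buyers:
            if a != b:
                overlap = len(buyers[a] & buyers[b])
                if overlap:
                    sims[a][b] = overlap / math.sqrt(len(buyers[a]) * len(buyers[b]))
    return sims

def recommend(purchases, customer, top_n=2):
    """Rank products the customer does not own by similarity to those they do."""
    sims = item_similarities(purchases)
    owned = purchases[customer]
    scores = defaultdict(float)
    for item in owned:
        for other, sim in sims[item].items():
            if other not in owned:
                scores[other] += sim
    # Highest score first; ties broken alphabetically for a stable result.
    return sorted(scores, key=lambda item: (-scores[item], item))[:top_n]

print(recommend(PURCHASES, "ben"))
```

Because the similarity table depends only on products, it can be computed ahead of time and reused for every shopper, which is one reason product-level approaches scale well.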

Common AI use cases in ecommerce include:

- Product recommendations: Predictive analytics power advanced recommendation systems that analyze the historical purchases of website and app visitors to recommend more products. The models that run these systems are based on choices of similar users who use the same kinds of products or provide similar customer ratings.

- Product-shipping models: Predictive analytics is also used in anticipatory shipping models, which predict the products each customer is most likely to purchase and ensure those items are stocked in the nearest warehouse.

- Price optimization: Ecommerce companies also use AI for price optimization, providing discounts on popular items and earning profits on less popular items. Fraud detection is another application, with algorithms used to flag fraudulent sellers and fraudulent purchases.

Other common AI use cases in ecommerce include:

- A/B testing

- Chatbots and virtual assistants

- Churn prediction

- Customer retargeting

- Demand forecasting

- Dynamic pricing

- Fraud detection

- Frontline worker scheduling and enablement

- Image processing

- Personalization

- Routing optimization

- Sales process improvements

- Website search engines

Energy

Energy management organizations are applying AI at a growing rate; the global market for AI in energy is projected to grow 21% from 2022 to 2030, according to Research and Markets research published in August 2022. Energy AI use cases are growing along with global energy production and consumption, with organizations using AI to improve energy efficiency, grid stability, and smart energy solutions.

Common AI use cases in energy include:

- Anomaly detection

- Demand forecasting

- Digital twins (simulations)

- Efficient energy storage

- Front-end engineering and design (FEED) automation

- Inventory management

- Logistic optimization

- Market pricing

- New-material discovery

- Predictive maintenance

- Production optimization

- Security

- Smart grids and microgrids

- Storage efficiency

- Usage forecasting

Finance

Finance organizations have been applying machine learning to surface insights, make critical decisions more quickly, and automate high-volume, manual tasks associated with trading in public markets.

Traders are bullish on AI. In a JPMorgan Chase & Co. survey published in February 2023, traders said AI and machine learning will have the largest impact on financial markets in the years to come. More than half of the 835 respondents, all institutional and professional traders, expect AI to have a significant impact on trading over the next three years. That’s up from about 25% in 2022.

Common AI use cases in finance include:

- Advanced analytics: Hedge funds have invested heavily in machine learning and other advanced analytic techniques, as they are constantly searching for new sources of information to make better trading decisions. Powerful quantitative models serve as core pillars of the hedge fund business, and it’s no surprise that funds are early adopters of deep learning.

With so much money at stake, hedge funds increasingly are turning to “alternative data” to generate leading indicators of market trends. For example, they can input satellite images into GPU-accelerated neural networks that will estimate everything from the number of ships in a port to the amount of crops growing in a field. By using deep learning techniques to generate higher quality inputs, they can improve the outputs of their existing quantitative models.

- Contract processing: Natural language processing (NLP) is used in the finance industry for processing contracts. Applying NLP models to read and parse contracts can significantly reduce hours of redundant labor. For example, JPMorgan developed a text-mining solution it refers to as COIN (Contract Intelligence). COIN helps analyze commercial loan contracts by parsing the document for certain words and phrases, saving the company 360,000 hours per year.

- Customer communications: Applying NLP models to customer communications on social media, phone transcripts, and customer service chat platforms allows financial institutions to categorize customer feedback and gauge sentiment to better understand their customers. AI provides the ability to analyze comments for sentiments that signal intentions, identify patterns to suggest areas for improvement, or flag issues before they affect a large number of customers.
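The simplest form of the contract parsing described above is a phrase search that pulls out the sentences a reviewer needs to see. The sketch below is only that: a toy keyword scan using Python's standard `re` module, with a hypothetical phrase list and sample text. It does not represent how COIN or any commercial NLP system works internally.

```python
import re

# Hypothetical phrases a loan reviewer might want surfaced.
PHRASES = ["interest rate", "event of default", "governing law"]

def find_phrases(text, phrases=PHRASES):
    """Map each phrase to the sentences that mention it (case-insensitive)."""
    hits = {}
    # Naive sentence split: break after a period or semicolon followed by whitespace.
    for sentence in re.split(r"(?<=[.;])\s+", text):
        for phrase in phrases:
            pattern = r"\b" + re.escape(phrase) + r"\b"
            if re.search(pattern, sentence, re.IGNORECASE):
                hits.setdefault(phrase, []).append(sentence.strip())
    return hits

SAMPLE = (
    "The Interest Rate shall be 5% per annum. "
    "Upon an Event of Default, the Lender may accelerate the loan. "
    "Notices must be in writing."
)

hits = find_phrases(SAMPLE)
for phrase, sentences in hits.items():
    print(phrase, "->", sentences)
```

Real contract-analysis models go further by handling paraphrases, clause structure, and context, which a fixed phrase list cannot capture.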

Other common AI use cases in finance include:

- Rate-of-return analysis

- Portfolio management

- Market and trading risk

- Market price simulation

Government

Public agencies have an advantage over organizations in the private sector: a remarkable collection of data with high accuracy and a mandate to use it to provide better services to citizens. The primary challenge government agencies face in applying AI is keeping data secure. Some data is protected by law, such as individuals’ health and finance information. Nations and states must keep infrastructure and military data secure from exposures and risks that can be associated with AI solutions deployed at scale.

Multi-cloud strategies and security in the software development process (DevSecOps) and AI are all challenges that governments are facing today, according to a survey of business and IT leaders by Market Connections and Science Applications International Corporation (SAIC), in a report published in January 2023.

Common AI use cases in government include:

- Emergency response: The capability to incorporate data from multiple sources gives a significant advantage to local governments and authorities in their emergency response capabilities. Real-time analytics help support immediate decisions in stressful situations. Control over multiple communication channels, use of smart tools that recognize possible threats, and the ability to send alarms give local authorities the chance to warn citizens and advise them about further actions.

Other common use cases in government include:

- At-risk population support

- Benefits administration

- Climate analysis

- Criminal detection

- Digital transformation

- Economic analysis

- Equipment monitoring

- Fraud detection

- Health predictions

- Military support

- Personnel readiness

- Security threats

- Services modernization

- Trade surveillance

- Weapons innovation

Healthcare

A highly regulated area of service provision, healthcare has been forever changed by the introduction of AI applications that can identify medical issues faster, and often with greater accuracy, than people can. AI-assisted technologies are making it possible to improve diagnostic, descriptive, prescriptive, and predictive analytics to forecast individuals’ diagnostic outcomes.

AI adoption could lead to savings of 5% to 10% in US healthcare spending, or roughly $200 billion to $360 billion annually, according to researchers from Harvard and McKinsey in a January 2023 paper. The estimates take into consideration AI use cases for current technologies that will be available in the next five years.

Common AI use cases in healthcare include:

- Disease detection and diagnosis: Visual data processing helps radiologists read images faster for diagnoses, such as tumor detection. Radiologists’ workloads have increased significantly in recent years. Some studies found that the average radiologist must interpret an image every 3-4 seconds to meet demand.

Researchers have developed deep learning algorithms trained on previously captured radiographic images to recognize the early development of tumors in the lungs, breasts, brain, and other areas. Algorithms can be trained to recognize complex patterns in radiographic imaging data.

One early breast cancer detection tool developed by the Houston Methodist Research Institute interprets mammograms with 99% accuracy and decreases the need for biopsies. It also provides diagnostic information 30 times faster than a human. This leads to better patient care and helps radiologists be better at their jobs.

AI is also used for skin cancer diagnosis. Several researchers have used convolutional neural networks (CNNs) to develop machine learning models for skin cancer detection with 87-95% accuracy using TensorFlow, scikit-learn, Keras, and other open-source tools. In comparison, dermatologists have a 65% to 85% accuracy rate in detecting melanomas. In addition to skin cancer diagnosis, researchers are also using CNNs to develop tools for diagnosing tuberculosis, heart disease, Alzheimer’s disease, and other illnesses.

Other common use cases in healthcare include:

- Care delivery

- Chronic care management

- Clinical decision support

- Data management

- Digital pathology

- Disease forecasting

- Disease research and treatment

- Drug development

- Emergency dispatch optimization

- Genetic medicine

- Healthcare equity improvements

- Medical imaging analysis

- Patient self-care and wellness

- Telemedicine capabilities

Insurance

The insurance industry has a long quantitative tradition; however, this heavily regulated, risk-averse field has not had the same focus on data science and machine learning as sister industries banking and finance. Yet, insurance organizations have been shifting from a piecemeal approach to technology, transforming system by system, to initiatives led by line-of-business and department heads who are collaborating with chief information officers (CIOs) and chief technology officers (CTOs).

In its 2023 Insurance Outlook, the Deloitte Center for Financial Services urges insurance organizations to focus technology strategies and investments on differentiating insurers in customer segmentation, product support, and value-added services.

Common AI use cases in insurance include:

- Underwriting assessments: The condition of a home’s roof is critical to accurately pricing coverage. Traditionally, many insurance companies rely on homeowner-reported roof age to assess roof condition, an approach that is obviously subject to error. But with deep learning, insurance companies can use photographs of a roof to create a deep learning model that will provide a much more accurate representation of the roof’s quality. This enables insurance companies to reduce home insurance risk.

- Claims adjustment: Insurance companies also are using image classification techniques to make the work of insurance adjusters faster and more accurate. Rather than relying on an insurance adjuster to read the odometer of a car that has been in an accident, a deep learning model can ingest a photograph of the odometer and determine the correct reading.

Again, it’s important to note that these companies are not throwing away their existing models in favor of entirely new approaches. Instead, they are leveraging new AI techniques to improve the inputs to their models.

Other common AI use cases in insurance include:

- Personalized offers

- Strategic pricing models

- Customer segmentation

- Property analysis

Manufacturing

For years, leaders in manufacturing have been applying machine learning to optimize safety, product quality, and large-scale delivery of goods. Opportunities to apply AI in manufacturing are seemingly countless, because the industry relies heavily on both hardware and software to deliver products.

Edge AI, which relies on sensors in the field to deliver data to platforms that organize and analyze data, is a common use case in manufacturing. This is also referred to as IoT, or the Internet of Things. Industry experts have touted another approach, called adaptive AI, as key to navigating data challenges in the cloud and on the edge.

Top AI use cases in manufacturing have focused on predictive models to forecast critical factors related to supply chain, maintenance, logistics, and inventory, among other areas. AI can deliver outsized benefits, and for manufacturers, that can mean lower costs, faster delivery, and higher quality.

Common AI use cases in manufacturing include:

- Quality control: Image recognition and anomaly detection are types of machine learning algorithms that can quickly detect and eliminate faulty parts before they get into the vehicle manufacturing workflow. Parts manufacturers can capture images of each component as it comes off the assembly line, and automatically run those images through a machine learning model to identify any flaws.

Highly accurate anomaly detection algorithms can detect issues down to a fraction of a millimeter. Predictive analytics can be used to evaluate whether a flawed part can be reworked or needs to be scrapped. Eliminating or re-working faulty parts at this point is far less costly than discovering and having to fix them later. It saves on more expensive issues down the line in manufacturing and reduces the risk of costly recalls. It also helps ensure customer safety, satisfaction, and retention.

- Supply chain optimization: Throughout the supply chain, analytical models are used to identify demand levels for different marketing strategies, sale prices, locations and many other data points. Ultimately, this predictive analysis dictates the inventory levels needed at different facilities. Data scientists constantly test different scenarios to ensure ideal inventory levels and improve brand reputation while minimizing unnecessary holding costs.

Optimization models help guide the exact flow of inventory from manufacturer to distribution centers and ultimately to customer-facing storefronts. Machine learning is helping parts and vehicle manufacturers and their logistics partners operate more efficiently and profitably, while enhancing customer experience and brand reputation.
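To illustrate the quality-control use case above, here is a minimal sketch of anomaly detection on part measurements. It flags any part whose measured dimension sits far from the batch median, using a modified z-score based on the median absolute deviation. The measurements, the 3.5 threshold (a common rule of thumb), and the function name are assumptions for illustration; production systems typically work on images and learned models rather than a single dimension.

```python
import statistics

def flag_outliers(measurements_mm, threshold=3.5):
    """Return the indices of parts whose measurement is anomalous for the batch."""
    median = statistics.median(measurements_mm)
    deviations = [abs(m - median) for m in measurements_mm]
    mad = statistics.median(deviations)
    if mad == 0:
        # All parts are effectively identical; nothing can be ranked as unusual.
        return []
    flagged = []
    for index, deviation in enumerate(deviations):
        # 0.6745 scales the MAD so the score is comparable to a standard z-score.
        modified_z = 0.6745 * deviation / mad
        if modified_z > threshold:
            flagged.append(index)
    return flagged

# Hypothetical shaft diameters in millimeters; the part at index 6 is 0.3 mm oversize.
BATCH = [25.00, 25.01, 24.99, 25.02, 24.98, 25.00, 25.31, 25.01]

flagged = flag_outliers(BATCH)
print("parts to inspect:", flagged)
```

Using the median rather than the mean keeps one badly out-of-spec part from distorting the baseline it is compared against.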

Other common AI use cases in manufacturing include:

- Digital twins (simulations)

- Edge AI

- Energy management

- Generative design

- Inventory management

- Just-in-time logistics

- Market analysis

- Predictive maintenance

- Predictive yield

- Price forecasting

- Process optimization

- Production optimization

- Quality assurance

- Robotics

- Root-cause analysis

Retail

The retail industry has taken its share of hits in the last few years, with the COVID-19 pandemic forcing retailers to shutter their doors and adapt to contactless shopping, service, and delivery. Those that were able to adapt quickly took retail to new heights of customer-focused service that, for many brands, strengthened their relationships with customers.

Big-box retailers Target and Walmart rolled out drive-up delivery and buy-online-pick-up-in-store (BOPIS) services, and some retailers, like Lowe’s Home Improvement, did so in a hurry to meet customer demand when the pandemic forced closures. Then came Russia’s war on Ukraine, and the supply-chain challenges that came with it squeezed the ability of retailers large and small to stock inventory, and with it their bottom lines.

AI provides incredible opportunities for retailers, among them: a deeper understanding of their customers and the personalization capabilities to engage their most valuable customers.

Common AI use cases in retail include:

- Customized services: Olay, the multi-billion dollar skin care brand, launched a “Skin Advisor” application using neural networks in 2016 and doubled its sales conversion rates. Prospective customers submit a photograph, and the model returns a customized skin assessment and suggests corrective products for the customer to purchase.

While this might seem like a novelty product, it has had a significant financial impact. As a result of the application, average basket size (the amount of goods a customer purchases at one time) went up 40% and conversion rates doubled. By harnessing AI, Olay offered a personalized shopping experience, and customers responded by buying more products.

Other common AI use cases in retail include:

- Customer segmentation

- Demand forecasting

- Delivery scheduling and route optimization

- Inventory optimization

- Planogram creation

- Product recommendations

- Supply chain optimization

- Workforce scheduling and management

7 Capabilities to Look for in an AI Platform

Success with AI comes down to an organization’s ability to build machine learning models at scale and rapidly deploy them. These are key capabilities to look for in your AI platform to ensure you can deploy more models into production, faster.

1. Automation

Automation is a critical accelerant for scale and speed across the data science lifecycle. Once teams identify a successful process, technique, or framework, they can automate it and recalibrate automation as necessary based on the accuracy of outputs and outcomes.

The complexity of developing and deploying models can discourage iteration. But it is imperative that teams continue to revisit and refresh models as ground truth changes, that is, as the real-world conditions in which the model is deployed, and in which people act on its predictions, shift over time.

Look for an AI platform that applies automation strategically in ways that make it easier for your team to accelerate the automation of proven models with consistently solid performance. It should be easy to integrate the latest and best tools for your use case into your data science and machine learning environments.
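As one way to picture recalibrating automation based on the accuracy of outputs, the sketch below flags a model for retraining when its recent accuracy falls well below the level measured at deployment. The function name, the tolerance, and the minimum sample size are hypothetical choices for illustration; a real pipeline would tune them per use case.

```python
def needs_retraining(baseline_accuracy, recent_outcomes, tolerance=0.05, min_samples=50):
    """Decide whether a deployed model should be queued for retraining.

    recent_outcomes is a list of booleans, True where the model's prediction
    matched the outcome later observed in the real world.
    """
    # Too little evidence: do not trigger a retrain on noise.
    if len(recent_outcomes) < min_samples:
        return False
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    # Retrain only when accuracy has dropped by more than the tolerance.
    return recent_accuracy < baseline_accuracy - tolerance


if __name__ == "__main__":
    outcomes = [True] * 80 + [False] * 20  # hypothetical: 80% recent accuracy
    print(needs_retraining(baseline_accuracy=0.90, recent_outcomes=outcomes))
```

A scheduled job could run a check like this against logged predictions and start the retraining workflow automatically whenever it returns `True`.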

2. IT Enablement and Governance

Harnessing the power of open-source tools for machine learning includes maintaining control over the entire ML pipeline. IT administrators must be able to provision accounts based on job or role, track users, and make it easier for practitioners to share and collaborate on their projects. IT organizations need full control over their open-source supply chain, including the ability to track entire project lineage from packages to source code to deployment logs.

This approach gives data scientists control over model lineage and allows for reproducibility of successful models. It allows IT administrators to show that practitioners are using approved packages, have access to the compute resources they need, and are in compliance with enterprise IT and security policies or regulations.

Look for an AI platform that enables your IT administrators to control and govern the software supply chain, giving them the tools they need to manage user access and provide details for audits.
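To illustrate what showing that practitioners are using approved packages might involve, here is a minimal sketch of an allowlist audit. The data shapes, package names, and function name are assumptions made for this example and do not reflect any particular platform's API.

```python
def audit_environment(installed, approved):
    """Compare installed packages against an IT-approved allowlist.

    installed maps package name -> installed version string.
    approved maps package name -> set of approved version strings.
    Returns a list of (name, version, reason) tuples for each violation.
    """
    violations = []
    for name, version in sorted(installed.items()):
        if name not in approved:
            violations.append((name, version, "package not approved"))
        elif version not in approved[name]:
            violations.append((name, version, "version not approved"))
    return violations


if __name__ == "__main__":
    # Hypothetical environment and allowlist.
    installed = {"examplelib": "2.1.0", "plotkit": "0.9.3", "scratchpad": "0.1.0"}
    approved = {"examplelib": {"2.1.0", "2.2.0"}, "plotkit": {"1.0.0"}}
    for violation in audit_environment(installed, approved):
        print(violation)
```

In a live Python environment, the `installed` mapping could be built from the standard library's `importlib.metadata.distributions()`, and the resulting report could be attached to an audit trail.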

3. Scale

The importance of scale in ML model training and production cannot be overstated. It’s not enough to build and train models on a laptop with a subset of data. Data scientists must scale their model training to build powerful models, which means they need centralized workflows that allow them to design and build analytics and machine learning models, collaborate with other practitioners, and track their experiments and iterations.

For IT and security teams, scale requires the right tools to deploy those models into production securely, with confidence that they can quickly identify and mitigate security risks.

Thanks to GPUs and TPUs, this level of scale in model training is now economically feasible. But deploying these massive levels of compute requires significant supporting infrastructure. Thus, today’s challenge is not where to find the computing power, but how to manage an environment that supports it.

Look for an AI platform that can deploy as you like—online or offline—and can handle your organization’s requirements, use cases, and throughput as you scale your application of AI.

4. Security

For chief information security officers (CISOs) and IT administrators, securing the open-source software supply chain is paramount. Data scientists commonly use open-source packages to develop and test machine learning models, analyzing their outcomes for accuracy, scalability, and many other factors. In that process, they often download software packages directly to their laptops to run them there.

This situation puts IT administrators at a disadvantage because public sources come with additional risks that must be monitored and mitigated constantly.

Look for an AI platform with maintainers and authors who are experts in the tools and techniques that your team would use to take machine learning models from the build stages to high-performing production deployments. Great AI platforms will have a proven track record of monitoring CVEs in open-source packages and tools using both automation and manual curation, incorporating bugs and feedback reported from the open-source community.
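The automated half of CVE monitoring can be pictured as matching installed package versions against a curated advisory feed. The sketch below assumes a simplified, hypothetical advisory format (an identifier, a package name, and the first fixed version) and plain dotted numeric versions. Real advisory data and version schemes are more complex, so treat this as an outline rather than a working scanner.

```python
def parse_version(text):
    """Turn a dotted numeric version such as '1.4.2' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def affected_packages(installed, advisories):
    """Return (advisory_id, package, version) for each vulnerable install.

    installed maps package name -> version string.
    advisories is a list of dicts with hypothetical keys
    'id', 'package', and 'fixed_in'.
    """
    findings = []
    for advisory in advisories:
        version = installed.get(advisory["package"])
        # A package is affected when it is installed at a version older
        # than the first release that contains the fix.
        if version is not None and parse_version(version) < parse_version(advisory["fixed_in"]):
            findings.append((advisory["id"], advisory["package"], version))
    return findings


if __name__ == "__main__":
    installed = {"examplelib": "1.4.2", "plotkit": "3.0.0"}
    advisories = [
        {"id": "EXAMPLE-0001", "package": "examplelib", "fixed_in": "1.5.0"},
        {"id": "EXAMPLE-0002", "package": "plotkit", "fixed_in": "2.9.1"},
    ]
    print(affected_packages(installed, advisories))
```

Manual curation then complements a check like this by reviewing whether each reported match actually applies to how the package is used.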

5. Support

Too often one of the last capabilities that enterprise buyers look for, support is a critical element of any AI platform. Especially when teams are working with open-source software, they will need reliable bug reporting and tracking, engaging training resources, and ongoing support.

Look for an AI platform that provides the support your team will need, from onboarding support to resources for learning, to just-in-time support when you need it. Make sure the organization that builds and maintains your AI platform prioritizes enterprise support and is capable of delivering it at scale, even in highly regulated industries that face significant penalties for data breaches or adverse effects from their use of applied AI.

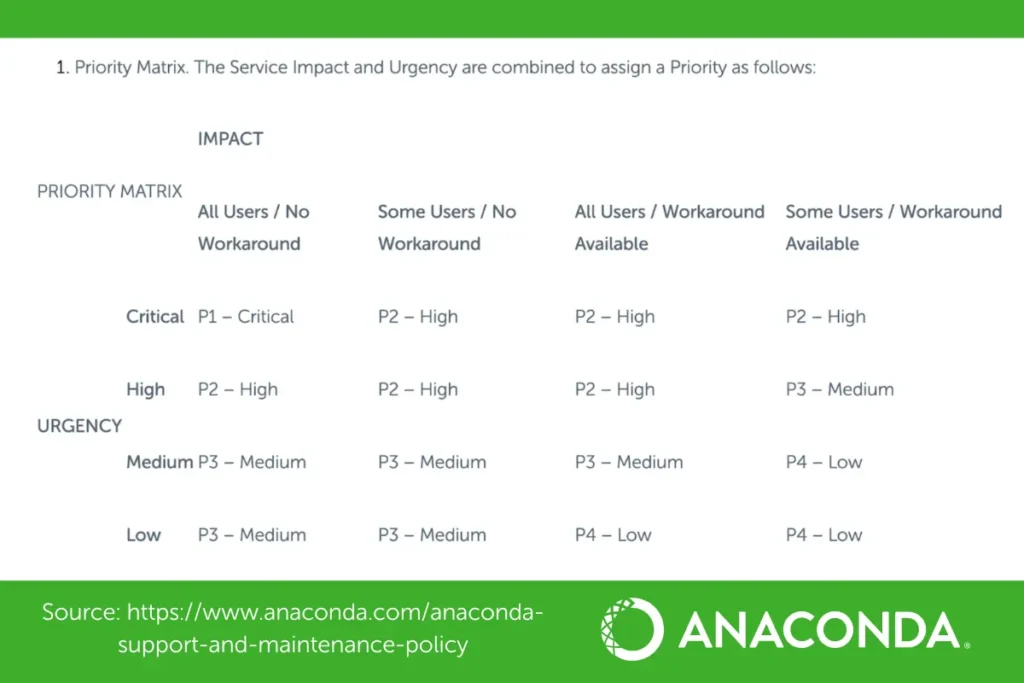

The best AI platforms will provide you with a priority matrix for support provision, such as the one in the example below.

6. Open-Source Tools

A great AI platform will have integrations that support the use of open-source software for data science and machine learning. Look for an AI platform that provides easy access to tools like Jupyter Notebook, Keras, Matplotlib, Scikit-learn, and TensorFlow.

Some platforms will allow you to centralize workflows on these tools, which means your data science and ML practitioners can collaborate, share data, and compare models, and your IT administrators can provision accounts, govern access and ensure security.

Look for an AI platform that gives you easy access to open-source tools and repositories. Make sure they are tracking CVEs on those platforms by version, package, and date. The best AI platforms are built and maintained by organizations and teams that stay entrenched in the open-source community by contributing research, resources, and talent—adding to the diversity of talent and research and increasing the volume of innovative technologies available for data science and machine learning practitioners.

7. Open-Source Contributions

Look for an AI platform that maintains strong connections to the open-source community and its contributors. The best AI platforms will be deeply connected to the open-source community to support innovation and robust security with vulnerability curation by their team and the ecosystem. It’s even better when authors of open-source software are employed by the organization that runs the AI platform you choose.

Keep in mind, there are many ways an organization can contribute to the open-source community. Some organizations invest in open-source software by creating integrations with or embedding access to key tools in their offerings. Others contribute to open source by sharing best practices in content that is easily accessible to data science and machine learning practitioners.

Some organizations hire developer relations (DevRel) teams with experts on aspects of open-source data science, software development, and machine learning and collaborate across the ecosystem on content to help educate the community.

Questions to Ask Your AI Platform Provider

Ask potential technology providers these questions around IT enablement and governance, security, open source, and more.

Automation

1. What is required to integrate open-source orchestration tools into the AI platform?

IT Enablement and Governance

2. Describe the system in place to track data lineage and monitor data quality. Do you have tools to manage data catalogs, metadata, and other artifacts?

3. How do you secure data to comply with Europe’s GDPR (General Data Protection Regulation), CCPA (California Consumer Privacy Act) and other privacy regulations?

Scale

4. How will our data scientists create an environment for experimentation and model training? Describe the steps.

5. How easy and efficient is it to set up an online prediction system with your platform?

6. What kind of hardware support do you provide? What is the cost of that hardware?

Security

7. What does access control look like with this platform? Describe the steps an administrator would take to provision an account or deactivate access.

8. How does your platform track logging, monitoring, and alerts? Describe the process for these capabilities for an IT administrator.

9. How many levels deep does your team monitor common vulnerabilities and exposures (CVEs) that affect users of your platform?

Support

10. How much user effort is required in onboarding?

11. Is training available on an ongoing basis? Where do users access the training, and how often are materials added to the training repository?

12. Describe the process for engaging your support team on an urgent, technical issue that affects our customers or users. How do you prioritize outages and their impacts?

Open-Source Tools

13. How would an IT administrator provision open-source tools for data scientists and engineers—such as Jupyter Notebook, MLflow, Scikit-learn, or TensorFlow—using your platform?

14. How does your platform secure the packages that our data scientists are using?

Open-Source Contributions

15. Do you contribute to the open-source community? Describe how your organization is involved in the open-source ecosystem.

Anaconda: Secure, Centralized Solution for Python

With Anaconda, your data scientists can focus on doing science with data—not toiling in DevOps, software engineering, and IT tasks. One platform provides all of the tools they need to connect, share, and deploy projects.

Anaconda’s platform automates your organization’s AI pipelines from laptops to training clusters to production clusters with ease. Anaconda supports your organization no matter the size, scaling from a single practitioner using one laptop to thousands of machines. Anaconda automates the undifferentiated heavy-lifting, the glue code that prevents organizations from rapidly training and deploying models at scale.

From an IT perspective, Anaconda provides automated AI pipelines. Anaconda’s cloud-native architecture makes scaling simple. Security officers can be confident that all data science assets—packages, projects, and deployments—are managed securely with appropriate access control configured automatically.

FAQ

What is an AI platform?

An AI platform is a software solution that enables businesses to develop and deploy AI-powered applications. It typically includes a set of tools and services for data scientists, developers, and business users, as well as a runtime environment for deploying AI models.

Some popular AI platforms include Google Cloud Platform (GCP), Amazon Web Services (AWS), IBM Watson, Microsoft Azure, and Anaconda. Each of these platforms offers a different set of features and services, so it’s important to choose one that best meets the needs of your organization.

How can I understand how to build an AI platform?

There is no one-size-fits-all answer to this question, as the best way to build an AI platform depends on the specific goals and requirements of the project. However, there are some general tips that can help you get started.

First, it is important to have a clear understanding of what you want your AI platform to achieve. What are the specific tasks or goals that you want it to accomplish? Once you have a good understanding of your goals, you can start to research which AI technologies will be best suited for your needs.

It is also important to consider how your AI platform will be deployed and used. Will it be deployed on-premises or in the cloud? Will it be used by internal teams or made available to external customers? These factors will influence the architecture of your platform and the technologies that you use.

Finally, don’t forget to consider the cost of building and maintaining your AI platform. Depending on the size and complexity of your project, this can be a significant expense. Make sure to budget accordingly and remember that early versions of your platform may not be perfect—so don’t be afraid to iterate and experiment as you continue to develop it.

What is a conversational AI platform?

A conversational AI platform is a software application that enables users to interact with AI agents in a natural way, using conversation as the primary interface. The goal of a conversational AI platform is to make it easier for people to access and use AI services, by providing a more user-friendly interface than traditional text-based or graphical interfaces.

Conversational AI platforms typically provide a set of tools and services that allow developers to build, train, and deploy chatbots or virtual assistants. These platforms usually offer some degree of integration with existing messaging applications, such as Facebook Messenger or Slack. In addition, many conversational AI platforms offer APIs that allow developers to integrate their chatbots or virtual assistants into other applications or services.

Most conversational AI platforms are based on machine learning techniques, which allow the chatbots or virtual assistants to improve their performance over time through experience. Some popular conversational AI platforms include Amazon Lex, Google Dialogflow, IBM Watson Assistant, and Microsoft Bot Framework.

What is Google’s AI platform?

Google’s AI platform is a comprehensive set of tools and services that enables developers to build, train, and deploy machine learning models. It includes both hardware and software components, as well as a variety of cloud-based services.

What is the best AI platform?

Some popular AI platforms include Google Cloud Platform (GCP), Amazon Web Services (AWS), IBM Watson, and Microsoft Azure. Practitioners and organizations that want to leverage the innovation, security, and support of the open-source community choose Anaconda, which allows them to build and deploy solutions using Python. Each of these platforms offers a different set of features and services, so it’s important to choose one that best meets the needs of your organization.

What is Microsoft’s AI platform?

Microsoft’s AI platform is a comprehensive set of tools and services that enable developers to build intelligent applications. It includes Azure Machine Learning, which allows developers to build, train, and deploy machine learning models.

How is an AI platform used?

AI platforms can be used to build a variety of applications, including chatbots, virtual assistants, recommendation engines, predictive maintenance systems, and fraud detection solutions. Many of these applications are powered by machine learning algorithms that learn from data and improve over time.