Why Work Locally?

In a world of ever-growing AI models with hundreds of billions of parameters, a lot of industry focus is placed on the extremes of computing. We read about companies working with petabytes of raw data, trillions of filtered data points, and the tools they use to train on clusters that cost $500K a day to rent. While these efforts represent an exciting niche of AI and ML, there’s a tremendous number of data tasks that do not require the cost or complexity of mega-scale AI projects. How should a data scientist tackle much more common problems where the scale might be “only” 10-100 GB of data? The answer might be simpler than you think!

We’ve learned a lot about how customers do their work over the years, and we’ve found that many data scientists begin their projects on their own laptops. They stage the data they are analyzing on the laptop’s internal storage and process it using Python scripts or Jupyter Notebooks running on the laptop itself. But why work this way, when the cloud is so ubiquitous and powerful? The biggest reason is avoiding friction. No matter how easy, provisioning a cloud server requires some effort (and sometimes approval), and getting data and software packages to and from that server is an additional chore and cost. A data scientist’s own computing device is always provisioned for their use, preloaded with the tools they prefer, and has few incremental costs to justify. Laptop users can freely switch between their favorite desktop productivity apps and command line tools as they work, which can be very helpful when writing documents or presentations with analysis results. Additionally, the quality of the internet connection is not usually a concern with local computation, which is especially important for hybrid workers who frequently work from different locations.

How is this possible? It turns out that both computer hardware and data science software have been undergoing a quiet revolution.

The Data Science Workstation

The humble computer has increased in capability significantly in the past few years. Three major trends make a modern workstation quite the powerhouse for data science and AI:

- Increases in processing power and performance: At the heart of most data science, ML, and AI tasks are highly parallel calculations that can take advantage of higher CPU clock speeds, more processing cores, and larger CPU caches. Some tasks can also scale to the latest GPUs.

- Expanding memory options: High-end workstations like the Lenovo ThinkStation PX can now be configured with up to 4TB of memory, and mobile workstations like the Lenovo ThinkPad P16 Gen 2 can support up to 192GB, enabling users to manipulate some pretty large datasets entirely in memory.

- Fast and large disk storage: High-end Lenovo workstations have transitioned to extremely fast PCIe NVMe storage, supporting capacities up to 60TB per workstation. This ultra-fast storage can deliver read speeds of several gigabytes per second, making it ideal for many data science tasks. For situations where internal storage isn’t big enough, Thunderbolt 4 and 25GbE Ethernet provide fast access to external storage enclosures.

Put all of this together, and a workstation can be an effective tool to efficiently load large data sets into memory and process them in parallel to get results, fast.
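To put the 10-100 GB scale in perspective, here is a back-of-the-envelope sketch. It assumes a dense table of float64 values (8 bytes each), illustrative sustained read rates (5 GB/s for a PCIe Gen4 NVMe drive, the 3.125 GB/s line rate of 25GbE, and 0.125 GB/s for a 1 Gbit/s internet link), and a hypothetical 1.5x memory headroom rule for intermediate copies. None of these numbers are measurements from this post.

```python
# Back-of-the-envelope sizing for a "medium data" workload.
# All rates and the headroom factor are illustrative assumptions.

BYTES_PER_FLOAT64 = 8

def table_size_gb(rows: int, columns: int, bytes_per_value: int = BYTES_PER_FLOAT64) -> float:
    """In-memory size of a dense numeric table, in decimal gigabytes."""
    return rows * columns * bytes_per_value / 1e9

def load_seconds(size_gb: float, throughput_gb_per_s: float) -> float:
    """Time to stream size_gb at a sustained throughput (ignores parsing cost)."""
    return size_gb / throughput_gb_per_s

def fits_in_memory(size_gb: float, ram_gb: float, headroom: float = 1.5) -> bool:
    """Hypothetical rule of thumb: reserve extra room for copies and intermediates."""
    return size_gb * headroom <= ram_gb

size = table_size_gb(rows=1_250_000_000, columns=10)  # 100 GB of float64 values
for label, rate in [("PCIe Gen4 NVMe (assumed 5 GB/s)", 5.0),
                    ("25GbE line rate (3.125 GB/s)", 3.125),
                    ("1 Gbit/s internet (0.125 GB/s)", 0.125)]:
    print(f"{label}: {load_seconds(size, rate):.0f} s")
print("Fits in 64 GB?", fits_in_memory(size, ram_gb=64))
print("Fits in 192 GB?", fits_in_memory(size, ram_gb=192))
```

Under these assumptions, reading 100 GB from local NVMe takes about 20 seconds versus roughly 13 minutes over a 1 Gbit/s connection, which is one concrete way the local-storage argument plays out.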

In this blog post, we benchmark a selection of mobile and desktop workstations from Lenovo. We test them in several data science and AI scenarios to highlight where differences in size, weight, and specifications can affect your workflow.

For mobile workstations, we chose two laptops that represent different tradeoffs between power and portability. Both are available in a wide variety of configurations; we specifically tested the following.

Laptop/Mobile Workstation #1: Lenovo ThinkPad P1 Gen 7

CPU: Intel Core Ultra 9 (14 cores, 18 threads)

NPU: Intel® AI Boost w/integrated Intel® Core Ultra Processor – Max. 11 TOPS

GPU: NVIDIA RTX 3000 Ada Gen. Laptop GPU (8GB GDDR6 VRAM)

Memory: 64GB (Max. 64GB) DDR5 LPCAMM

Storage: 4TB PCIe Gen.4 NVMe

Technical Specifications on Lenovo.com

Product Page on Lenovo.com

Laptop/Mobile Workstation #2: Lenovo ThinkPad P16 Gen 2

CPU: 14th Generation Intel Core i7-14700HX (20 cores, 28 threads)

NPU: N/A

GPU: Mobile NVIDIA RTX 5000 Ada Gen. (16GB GDDR6 VRAM)

Memory: 32GB (Max. 192GB) DDR5

Storage: 1TB PCIe Gen.4 NVMe

Technical Specifications on Lenovo.com

Product Page on Lenovo.com

The Lenovo ThinkPad P1 is notable because it puts a lot of functionality in a very portable form factor, weighing in at only 4 lbs. The ThinkPad P16, on the other hand, offers one of the fastest CPUs and mobile GPUs available in any laptop form factor; however, it weighs in at 6.5 lbs.

Desktop/Fixed Workstation #3: Lenovo ThinkStation P5

CPU: Intel Xeon W7-2595X (26 cores, 52 threads)

NPU: N/A

GPU: NVIDIA RTX 5000 Ada Gen. (32GB GDDR6 VRAM)

Memory: 128GB (Max. 512GB) DDR5

Storage: 1TB PCIe Gen.4 NVMe

Technical Specifications on Lenovo.com

Product Page on Lenovo.com

Desktop/Fixed Workstation #4: Lenovo ThinkStation P7

CPU: Intel Xeon W9-3475 (36 cores, 72 threads)

NPU: N/A

GPU: NVIDIA RTX 6000 Ada Gen. (48GB GDDR6 VRAM)

Memory: 256GB (Max. 2TB) DDR5

Storage: 1TB PCIe Gen.4 NVMe

Technical Specifications on Lenovo.com

Product Page on Lenovo.com

The ThinkStation P5 and P7 are more powerful desktop workstations, better suited to demanding AI workloads. They can be used 1:1 as a personal desktop workstation or accessed remotely as a secure, sandboxed development environment for projects that grow in size or complexity over their lifecycle. This makes them a good way to offset rising cloud compute costs.

Our test ThinkStation P5 featured a powerful Intel Xeon CPU, 128GB memory, and a 32GB GPU.

Our test ThinkStation P7 featured a more powerful configuration, with increased CPU performance, double the system memory, and the fastest desktop GPU for AI workloads with a whopping 48GB of VRAM.

Anaconda: Data Science Wherever You Need It

Fast hardware is only half of the solution for doing data science right on your desk. Data scientists also need software that solves their problems, regardless of what platform they are using. In the case of laptops, especially enterprise-issued laptops, this means Windows support. Anaconda offers thousands of open-source data science packages for Windows, Mac, and Linux operating systems. These packages can be installed and updated using the conda package manager, which allows users to manage their software environments for all of their data science projects. Thanks to conda, Anaconda’s millions of users get the same experience on all platforms and can install the open-source packages they need in the same way, regardless of operating system.

In fact, Windows users now have two different ways to install Anaconda. The most common approach is to use the Windows installer for Anaconda. This gives access to native Windows builds of all the open-source packages available in Anaconda and is the most popular choice among Anaconda users. However, the Linux version of Anaconda can also be installed on Windows systems using the Windows Subsystem for Linux (WSL). WSL version 2 provides a lightweight, virtualized Linux installation that is tightly integrated with the Windows operating system. Linux applications running in WSL 2 can access Windows files and even display graphical interfaces on the Windows desktop.

While Anaconda is available natively for Windows, many GPU-accelerated packages, like NVIDIA RAPIDS and Anaconda’s GPU-enabled builds of PyTorch, are only available for Linux. In many cases, these Linux-only tools can be used in WSL 2, including with NVIDIA GPU support. Microsoft makes it very easy to install WSL 2 on any recent Windows system, but installation requires administrative access, so it may not be available to users of enterprise-managed laptops. This is why we suggest the Windows-native version of Anaconda for most users, especially those new to Anaconda, and WSL 2 for users who need GPU acceleration.
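If you are unsure which of the two environments a Python session is running in, a quick heuristic check can help. This is a sketch that assumes WSL kernels report a release string containing "microsoft" (for example `5.15.x-microsoft-standard-WSL2`) and that WSL sets the `WSL_DISTRO_NAME` environment variable; both are common conventions rather than guaranteed interfaces.

```python
import os
import platform

def running_under_wsl() -> bool:
    """Heuristic: True if this Linux process appears to be running inside WSL."""
    if platform.system() != "Linux":
        return False
    release = platform.uname().release.lower()
    # WSL kernels typically embed "microsoft" in the release string,
    # and WSL usually sets WSL_DISTRO_NAME for the processes it launches.
    return "microsoft" in release or "WSL_DISTRO_NAME" in os.environ

def environment_label() -> str:
    """Return a short label for the current platform."""
    system = platform.system()
    if system == "Windows":
        return "Win64"  # assumes a 64-bit Windows Python install
    if running_under_wsl():
        return "WSL"
    return system  # e.g. "Linux" or "Darwin"

print(environment_label())
```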

| | Microsoft Windows | Microsoft Windows w/WSL2 & Linux | Native Linux OS |
|---|---|---|---|
| Standard AI Packages | ✓ | ✓ | ✓ |
| CPU Optimized Packages | ✓ | ✓ | |
| GPU Optimized Packages | | ✓ | ✓ |

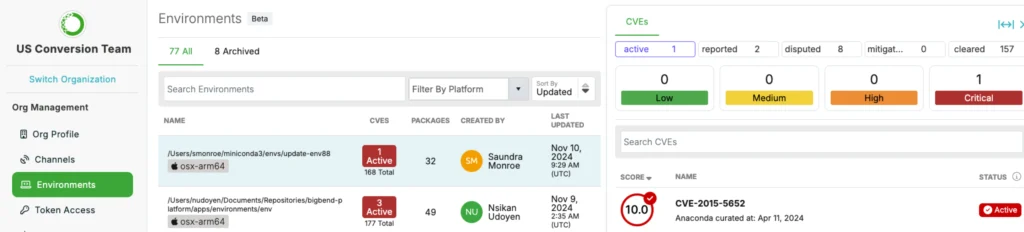

A Note to IT Managers

One group that might be less excited about the proliferation of data science work on employee laptops is IT. It can seem easier to manage security on a centralized set of cloud servers than on a distributed set of employee laptops. However, Anaconda has you covered here with our Package Security Manager (PSM), included with Anaconda’s Business tier. PSM allows IT to centrally manage the open-source data science packages that users can install onto their laptops, workstations, and servers. IT can apply whatever license and security policies they need to curate the selection of packages made available to their organization via PSM, and end users can continue to use conda to manage environments and install packages on their laptops, just as they would on any other computer.

IT administrators can also get insights into which packages are the most popular and see when new security vulnerability reports are posted so they can remediate emerging security threats. Letting users do data science on their laptops doesn’t have to be a headache with the right governance tools.

Local AI Performance

To illustrate the AI potential of a mobile workstation, especially one with a dedicated GPU, we’re going to look at some PyTorch tasks. Mobile workstations are also excellent at many other data science tasks, like data preparation and more traditional machine learning; however, GPU performance is where they have improved the most in recent years.

Anaconda offers two different builds of PyTorch: one only for CPU-based computation and one with support for NVIDIA GPUs. The CPU builds of PyTorch are available for all platforms, while NVIDIA GPU support is only available for Linux, though it can be installed on Windows with WSL.
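You can check which build you have from Python. The minimal sketch below relies on `torch.version.cuda`, which is `None` in CPU-only builds, and `torch.cuda.is_available()`, which additionally needs a working NVIDIA driver and a visible GPU (inside WSL 2 on Windows, for example). The fallback for when PyTorch is not installed at all is our own addition so the snippet runs anywhere.

```python
import importlib.util

def pytorch_build_info() -> dict:
    """Report whether PyTorch is installed, whether it is a CUDA build,
    and which device a script should default to."""
    if importlib.util.find_spec("torch") is None:
        return {"installed": False, "cuda_build": False,
                "gpu_available": False, "device": "cpu"}
    import torch
    cuda_build = torch.version.cuda is not None  # None for CPU-only builds
    gpu_available = torch.cuda.is_available()    # needs driver + visible GPU
    return {"installed": True, "cuda_build": cuda_build,
            "gpu_available": gpu_available,
            "device": "cuda" if gpu_available else "cpu"}

info = pytorch_build_info()
print(info)
```

A training script can then move its model and batches with `.to(info["device"])`, so the same code runs on the CPU build under Windows and on the GPU build under WSL.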

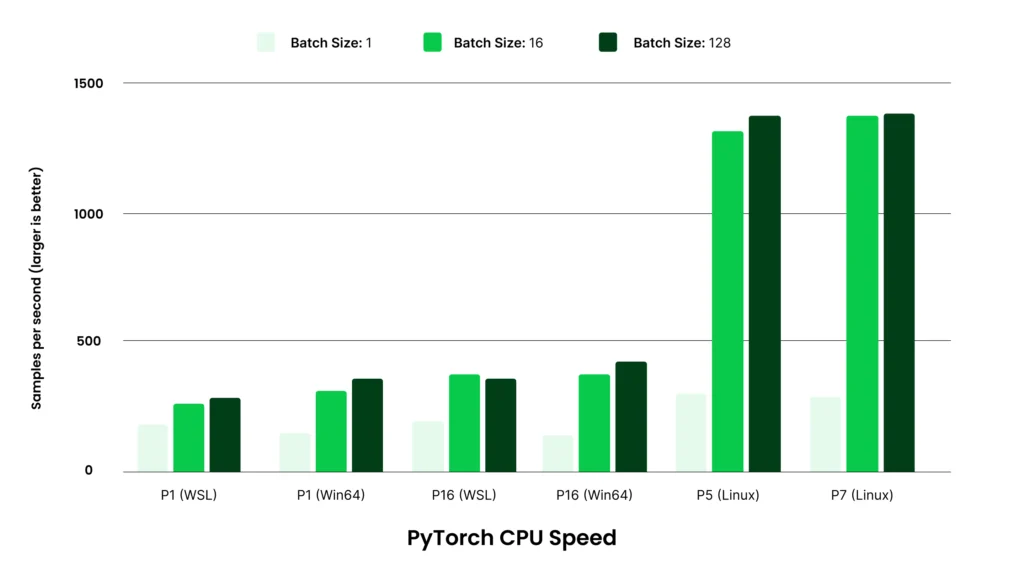

Let’s first test training a smaller text-encoding model (BERT) on the CPU. Note the following terms used in the chart labels:

- “P1”: ThinkPad P1

- “P16”: ThinkPad P16

- “P5”: ThinkStation P5

- “P7”: ThinkStation P7

- “WSL”: Windows Subsystem for Linux executables

- “Win64”: Native 64-bit Windows executables

- “Batch Size”: The number of data samples evaluated at once, either for training or inference purposes

From these results we can learn a few things:

- There is not a large or consistent difference between CPU performance on WSL vs. Win64. For a batch size of 1, WSL is slightly faster. For larger batch sizes, Win64 is faster.

- On the CPU, batch sizes greater than 1 improve performance somewhat, but increasing the batch size further does not make a major difference.

- The ThinkPad P16 is the speed winner on the CPU relative to the ThinkPad P1, but only by about 20% in the most favorable case.

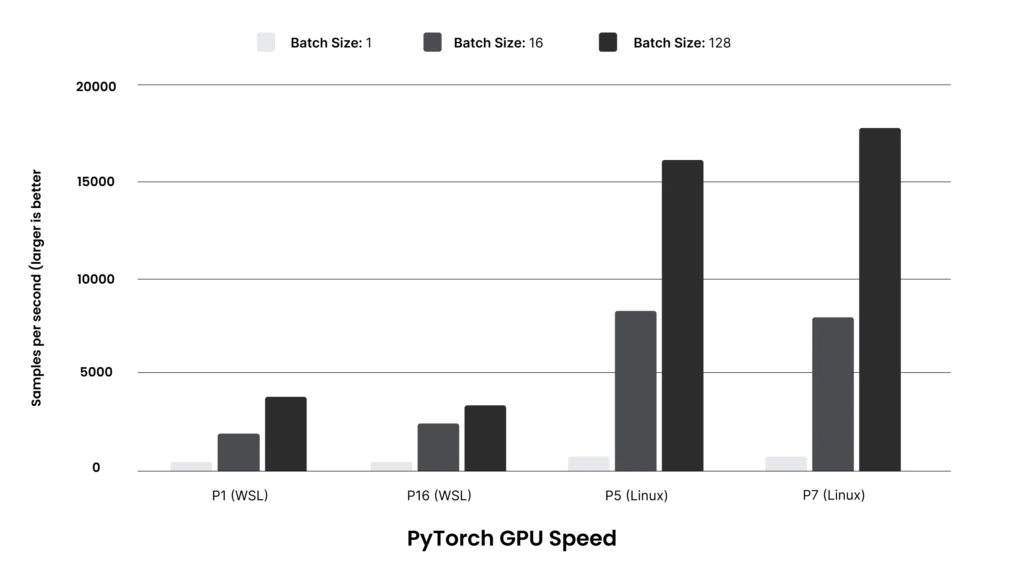

For assessing GPU performance, we must use WSL to run the appropriate NVIDIA GPU-enabled build, but we immediately see a huge speed improvement:

The first thing to note is the difference in vertical scale. With a batch size of 1, the GPU is already 1.7x to 2.3x faster than the CPU. Also, unlike the CPU case, increasing the batch size makes a huge difference in throughput. The GPU has a significant amount of parallel computing power, and in this case, the model is too small to make full use of the GPU with only a single sample at a time. Batching up the work activates more of the GPU’s capabilities and makes a major difference. However, it is curious that despite the differences between the ThinkPad P1 and P16 GPUs, their throughput is not significantly different.
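The batching effect can be illustrated without a GPU. The toy harness below is a sketch, not the benchmark behind these charts: it measures samples per second for a hypothetical `toy_step` function that pays an assumed fixed cost per call (simulated with `time.sleep`, standing in for per-call launch and transfer overhead) plus a small per-sample cost. With a batch size of 1, the fixed cost dominates; larger batches spread it across more samples.

```python
import time
from typing import Callable, Iterator, Sequence

def batches(samples: Sequence, batch_size: int) -> Iterator[Sequence]:
    """Yield consecutive slices of at most batch_size samples."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]

def samples_per_second(samples: Sequence, batch_size: int,
                       step: Callable[[Sequence], None]) -> float:
    """Run step() over every batch and report throughput in samples/s."""
    start = time.perf_counter()
    for batch in batches(samples, batch_size):
        step(batch)
    elapsed = time.perf_counter() - start
    return len(samples) / max(elapsed, 1e-9)

def toy_step(batch: Sequence) -> None:
    """Hypothetical stand-in for a model step: 2 ms fixed cost per call
    plus 0.01 ms per sample (both values are assumptions)."""
    time.sleep(0.002 + 0.00001 * len(batch))

data = list(range(128))
for bs in (1, 8, 64):
    print(f"batch size {bs:>3}: {samples_per_second(data, bs, toy_step):,.0f} samples/s")
```

A real GPU also gains from running more of its parallel units at once, which this sleep-based model does not capture; the sketch only shows why throughput, measured in samples per second, rises with batch size when per-call costs are significant.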

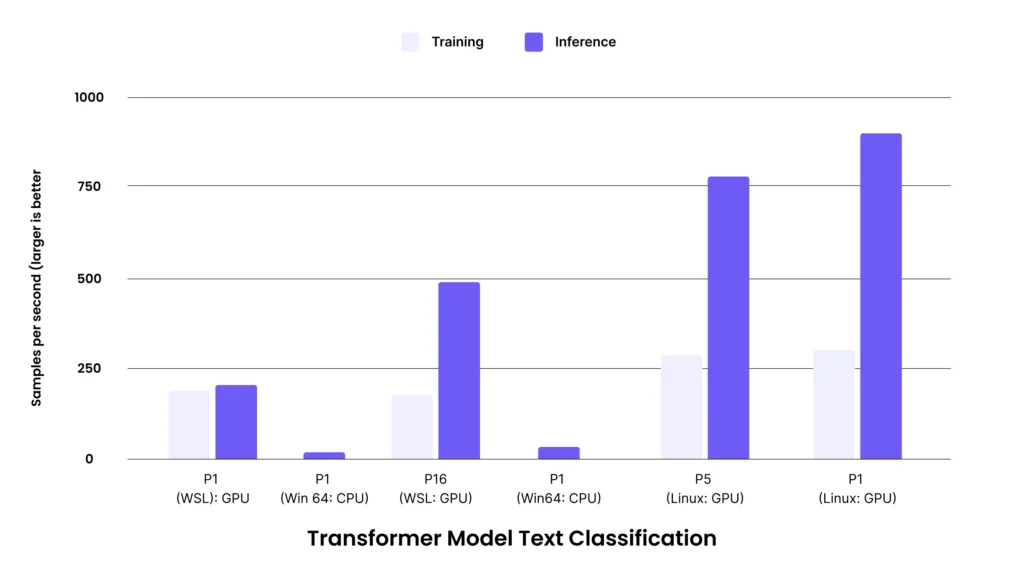

To understand this, we can move to an even larger model using the Transformers library, which is used to implement most large language models (LLMs) on top of AI packages like PyTorch. The library includes an example that uses a larger BERT model from Hugging Face for fine-tuning and inference. The performance of that model gives the following results:

Because this model is more complex, the number of samples per second has dropped, but the relative performance shows the following characteristics:

- CPU performance now lags far behind. For a model of this size, there isn’t much point in using the CPU at all.

- The capability of the higher-performance (and more power-hungry) GPU in the ThinkPad P16 shows through. For training, the GPU in the P16 is more than 2x faster than the GPU in the ThinkPad P1. For inference, the spread is even larger: the GPU in the ThinkPad P16 runs inference 6x faster than the GPU in the ThinkPad P1.

Overall, the key takeaway from the benchmarks in this section is that the GPUs available in these ThinkPads can be extremely helpful for AI workloads. The bigger and more complex the model, the more it benefits from a GPU, and from a larger GPU. On the flip side, for simpler models the CPU or a lower-power GPU might be fine, and a larger GPU does not necessarily deliver a proportional benefit.
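One reason larger GPUs pay off for larger models is memory. A common rule of thumb, used here as an assumption rather than a measurement from these benchmarks, is that full-precision training with the Adam optimizer needs about 16 bytes per parameter (4 for fp32 weights, 4 for gradients, 8 for Adam’s two moment estimates), before counting activations, which grow with batch size. BERT-base has roughly 110 million parameters and BERT-large roughly 340 million; the sketch compares that fixed training state with the VRAM of the tested GPUs.

```python
# Rough VRAM estimate for fp32 training with Adam (rule-of-thumb assumption).
BYTES_PER_PARAM = {
    "weights": 4,       # fp32 parameters
    "gradients": 4,     # one fp32 gradient per parameter
    "adam_moments": 8,  # two fp32 moment estimates per parameter
}

def training_state_gb(num_params: int) -> float:
    """Memory for weights + gradients + optimizer state, excluding activations."""
    return num_params * sum(BYTES_PER_PARAM.values()) / 1e9

# VRAM of the GPUs in the tested configurations, in GB (from the spec lists).
GPUS_GB = {"P1 (RTX 3000 Ada Laptop)": 8, "P16 (RTX 5000 Ada Laptop)": 16,
           "P5 (RTX 5000 Ada)": 32, "P7 (RTX 6000 Ada)": 48}

for model, params in [("BERT-base", 110_000_000), ("BERT-large", 340_000_000)]:
    need = training_state_gb(params)
    print(f"{model}: ~{need:.2f} GB of training state")
    for gpu, vram in GPUS_GB.items():
        # Whatever is left over must hold activations, which scale with batch size.
        print(f"  {gpu}: ~{vram - need:.2f} GB left for activations")
```

Under this estimate, a BERT-large-sized model leaves only about 2.5 GB of an 8GB GPU for activations, which limits the batch size, while a 16GB or larger GPU leaves far more room to batch.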

Easy Migration from Local to Cloud

A project can start small but sometimes grow beyond the capabilities of a portable computer. In those cases, it makes sense to scale to more powerful desktop workstations that support multiple CPUs, one to four GPUs, and multiple concurrent data science users. This can support a strong on-premises, secure workflow for AI development, and you can then scale to cloud servers for the final deployment stage of a data science project. Conda makes this possible by recreating your package environment on a remote system from an environment description file, even if your local laptop is running Windows and the remote workstation is running Linux. You can then resume work on the remote system with confidence, because you have already debugged your code and proven out the analysis on your local laptop.
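Environment files travel best between Windows and Linux when they list only package names and versions, without the platform-specific build strings that fully pinned exports contain (`conda env export --from-history` is one way to get a list of only the packages you explicitly requested). As an illustration, the sketch below takes specs in conda’s `name=version=build` form, as produced by `conda list --export`, drops the build field, and renders a minimal `environment.yml`. The package versions and build strings are hypothetical, and the hand-rolled YAML writer is a simplification; in practice, let conda generate and consume the file.

```python
import re

# Matches conda's fully pinned "name=version=build" form.
_PINNED = re.compile(r"^([^=<>!~\s]+)=([^=]+)=([^=]+)$")

def strip_build(spec: str) -> str:
    """Drop the platform-specific build string; leave other spec forms untouched."""
    match = _PINNED.match(spec)
    return f"{match.group(1)}={match.group(2)}" if match else spec

def environment_yml(name: str, channels: list[str], specs: list[str]) -> str:
    """Render a minimal environment.yml (simplified YAML, no quoting/escaping)."""
    lines = [f"name: {name}", "channels:"]
    lines += [f"  - {channel}" for channel in channels]
    lines.append("dependencies:")
    lines += [f"  - {strip_build(spec)}" for spec in specs]
    return "\n".join(lines) + "\n"

# Hypothetical specs exported on a Windows laptop.
windows_specs = ["python=3.11.9=h4de0772_0", "pandas=2.2.2=py311hf63dbb6_0", "pytorch"]
print(environment_yml("analysis", ["defaults"], windows_specs))
```

On the Linux workstation, `conda env create -f environment.yml` would then resolve Linux builds of the same package versions.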

Conclusion

Workstations have become amazing productivity tools for data scientists as they have gained more CPU cores, better GPUs, extremely fast storage, and large amounts of memory. Open-source packages like PyTorch and Transformers can make excellent use of these expanded hardware capabilities on common AI tasks.

Anaconda’s support for these popular open-source data science packages (along with thousands of others) on Windows as well as Linux makes it easy to get started with data science on your Lenovo ThinkPad or ThinkStation workstation. Enterprise IT can also manage the Python software available to their organization’s devices directly using Anaconda’s Package Security Manager, ensuring that IT policies are followed even in a distributed workforce. The combination empowers data scientists and AI professionals with cutting-edge open-source innovation while helping IT meet the security and budget requirements their leaders demand.